Keeping up with an industry as fast-moving as AI is a tall order. So until an AI can do it for you, here's a handy roundup of recent stories from the world of machine learning, along with notable research and experiments we didn't cover on their own.

This week in AI, Google paused its AI chatbot Gemini's ability to generate images of people after a segment of users complained about historical inaccuracies. Asked to depict “a Roman legion,” for instance, Gemini would show an anachronistic, cartoonish group of racially diverse foot soldiers, while rendering “Zulu warriors” as Black.

It appears that Google, like some other AI vendors including OpenAI, had implemented some clumsy hardcoding under the hood to try to “correct” for biases in its model. In response to prompts like “Only show me pictures of women” or “Only show me pictures of men,” Gemini would refuse, saying that such images could “contribute to the exclusion and marginalization of other genders.” Gemini was also reluctant to generate images of people identified solely by their race (e.g. “white people” or “black people”), out of ostensible concern about “reducing individuals to their physical characteristics.”

The right has seized on the mistakes as evidence of a “woke” agenda pushed by the tech elite. But it doesn't take Occam's razor to see the less nefarious truth: Google, burned by its tools' biases before (see: classifying Black men as gorillas, mistaking thermal guns in Black people's hands for weapons, etc.), is so desperate to keep history from repeating itself that it's manifesting a less biased world in its image-generating models, however flawed they may be.

In her bestselling book, White Fragility, anti-racist educator Robin DiAngelo writes about how the erasure of race (“colorblindness,” in other words) contributes to systemic power imbalances between races rather than mitigating or alleviating them. By claiming to “not see color,” or by reinforcing the notion that simply acknowledging the struggles of people of other races is enough to call oneself “woke,” people do harm by avoiding any substantive engagement with the issue, DiAngelo says.

Google's gingerly approach to race-based prompts in Gemini didn't avoid the issue per se, but rather disingenuously attempted to conceal the worst of the model's biases. One could argue (and many have) that these biases shouldn't be ignored or glossed over, but addressed in the broader context of the training data from which they arise: that is, society on the World Wide Web.

Yes, the datasets used to train image generators generally contain more white people than Black people, and yes, the images of Black people in those datasets reinforce negative stereotypes. That's why image generators sexualize certain women of color, depict white men in positions of authority and generally favor wealthy Western perspectives.

Some may argue that there's no winning for AI vendors. Whether they tackle models' biases or choose not to, they'll be criticized. And that's true. But I'd posit that, either way, these models lack explanation: they're packaged in a way that minimizes the ways in which their biases manifest.

If AI vendors addressed their models' shortcomings head-on, in humble and transparent language, it would go much further than haphazard attempts to “fix” what is essentially unfixable bias. The truth is that we all have biases, and we don't treat people the same as a result. Neither do the models we're building. And we'd do well to acknowledge that.

Here are some other notable AI stories from the past few days:

- Women in AI: TechCrunch has launched a series highlighting notable women in AI. Read the list here.

- Stable Diffusion v3: Stability AI has announced Stable Diffusion 3, the latest and most powerful version of the company's image-generating AI model, based on a new architecture.

- Chrome gets GenAI: Google's new Gemini-powered tool in Chrome lets users rewrite existing text on the web, or generate something completely new.

- Blacker than ChatGPT: Creative ad agency McKinney has developed a quiz game called “Are You Blacker than ChatGPT?” to shine a light on AI bias.

- Calls for laws: Hundreds of AI luminaries signed a public letter earlier this week calling for anti-deepfake legislation in the U.S.

- Match made in AI: OpenAI has a new customer in Match Group, the owner of apps like Hinge, Tinder and Match, whose employees will use OpenAI's AI technology to complete work-related tasks.

- DeepMind safety: DeepMind, Google's AI research division, has formed a new organization called AI Safety and Alignment, made up of existing teams working on AI safety but also broadened to include new, specialized cohorts of GenAI researchers and engineers.

- Open models: Barely a week after launching the latest version of its Gemini models, Google released Gemma, a new family of lightweight, open models.

- House task force: The U.S. House of Representatives has created a task force on AI which, as Devin writes, feels like a punt after years of indecision that show no sign of ending.

More machine learning

AI models seem to know a lot, but what do they actually know? Well, the answer is nothing. But if you phrase the question slightly differently, they do seem to have internalized some “meanings” that are similar to what humans know. Although no AI truly understands what a cat or a dog is, could it have some sense of similarity encoded in its embeddings of those two words that is different from, say, cat and bottle? Amazon researchers believe so.

Their research compared the “trajectories” of similar but distinct sentences, like “The dog barked at the burglar” and “The burglar made the dog bark,” with those of grammatically similar but different sentences, like “A cat sleeps all day” and “A lady jogs all afternoon.” They found that the sentences humans would consider similar were indeed treated internally as more similar despite being grammatically different, and vice versa for the grammatically similar ones. OK, I feel this paragraph was a bit confusing, but suffice it to say that the meanings encoded in LLMs appear to be more robust and sophisticated than expected, not entirely naive.
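The Amazon team's exact method for comparing “trajectories” isn't described here, so what follows is only a minimal sketch of the most common generic way to score how close two embeddings are: cosine similarity. The four-dimensional vectors are invented purely for illustration (real models use hundreds or thousands of dimensions), and none of the numbers come from the study.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction, 0.0 means orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy embeddings, made up for this example only.
embeddings = {
    "cat": [0.9, 0.8, 0.1, 0.0],
    "dog": [0.8, 0.9, 0.2, 0.1],
    "bottle": [0.1, 0.0, 0.9, 0.8],
}

cat_dog = cosine_similarity(embeddings["cat"], embeddings["dog"])
cat_bottle = cosine_similarity(embeddings["cat"], embeddings["bottle"])
print(f"cat/dog:    {cat_dog:.3f}")
print(f"cat/bottle: {cat_bottle:.3f}")
```

With these made-up vectors, “cat” lands much closer to “dog” than to “bottle,” which is the kind of relationship the researchers are probing for in real model internals.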

Neural encoding is proving useful in prosthetic vision, Swiss researchers at EPFL have found. Artificial retinas and other ways of replacing parts of the human visual system generally have very limited resolution due to the constraints of microelectrode arrays. So no matter how detailed the incoming image is, it has to be transmitted at very low fidelity. But there are different ways to downsample, and this team found that machine learning does a great job at it.

Photo credit: EPFL

“We found that using a learning-based approach gave us improved results in terms of optimized sensory encoding. But even more surprising was that when we used an unconstrained neural network, it learned to mimic aspects of retinal processing on its own,” Diego Ghezzi said in a press release. Basically, it performs perceptual compression. They tested it on mouse retinas, so it isn't just theoretical.
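For contrast with the learned encoder, here is a minimal Python sketch of the kind of naive downsampling a learning-based approach competes against: plain block averaging of an image onto a small grid that stands in for a hypothetical electrode array. This is not EPFL's method; the function name `block_average`, the 4x4 image and the 2x2 grid are all assumptions for illustration.

```python
def block_average(image: list[list[float]], factor: int) -> list[list[float]]:
    """Downsample a 2D grayscale image by averaging non-overlapping factor x factor blocks.

    Naive baseline only (not a learned encoder). Assumes height and width
    are both divisible by `factor`.
    """
    height, width = len(image), len(image[0])
    if height % factor or width % factor:
        raise ValueError("image dimensions must be divisible by factor")
    out = []
    for r in range(0, height, factor):
        row = []
        for c in range(0, width, factor):
            # Gather every pixel in this block and replace it with the mean.
            block = [image[r + i][c + j] for i in range(factor) for j in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


# Hypothetical 4x4 "camera" image squeezed onto a 2x2 electrode grid.
image = [
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
    [0.5, 0.5, 0.0, 1.0],
    [0.5, 0.5, 1.0, 0.0],
]
print(block_average(image, 2))
```

The bottom-right block, a diagonal pattern, collapses to a flat 0.5 that is indistinguishable from the uniform gray block beside it. That kind of lost detail is what a smarter, learned encoding would try to preserve.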

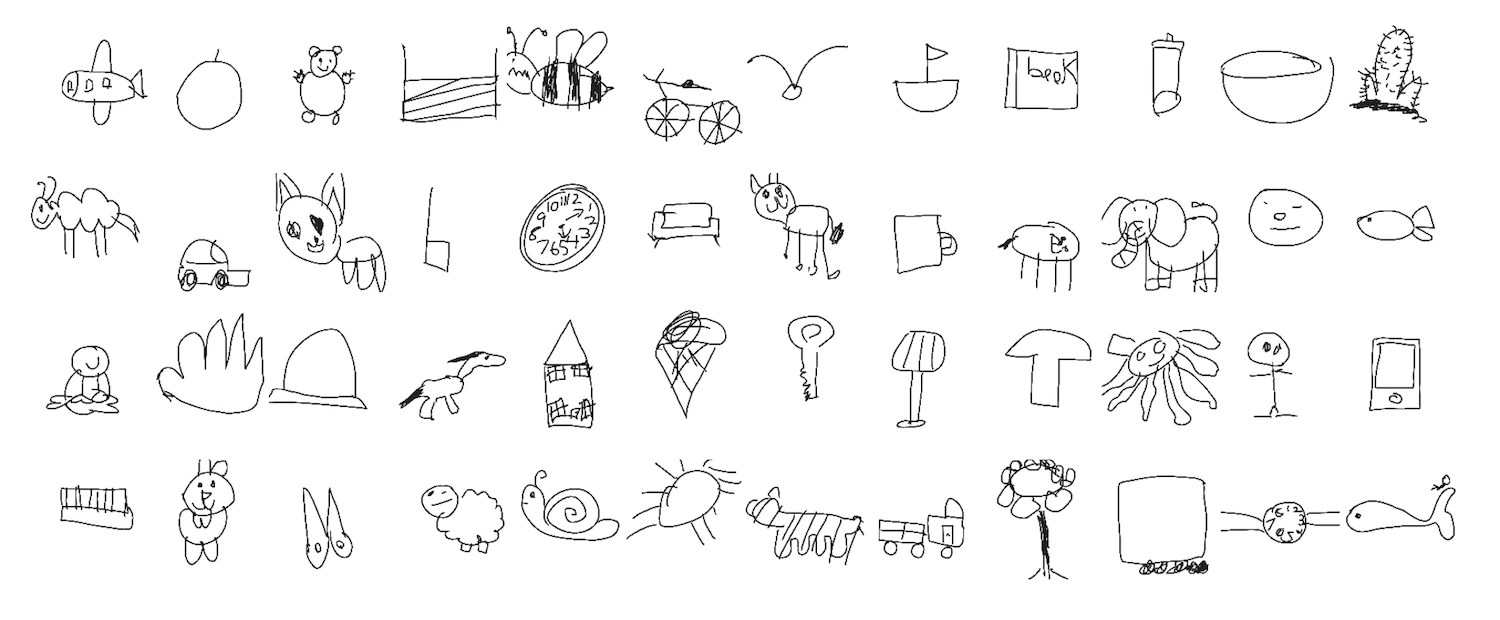

An interesting application of computer vision by Stanford researchers hints at a mystery in how children develop their drawing skills. The team collected and analyzed 37,000 children's drawings of various objects and animals, and also assessed (based on children's responses) how recognizable each drawing was. Interestingly, it wasn't just the inclusion of signature features like bunny ears that made drawings more recognizable to other children.

“The kinds of features that make older children's drawings recognizable don't seem to be driven by just a single feature that all older children learn to include in their drawings. It's something much more complex that these machine learning systems are picking up on,” said lead researcher Judith Fan.

Chemists (also at EPFL) found that LLMs are also surprisingly adept at helping out with their work after minimal training. It's not just about doing chemistry directly, but about getting oriented in a body of work that no individual chemist could possibly know in full. For example, across thousands of publications there may be a few hundred statements about whether a high-entropy alloy is single-phase or multiphase (you don't have to know what that means; they do). The system (based on GPT-3) can be trained on this type of yes/no question and answer, and is soon able to extrapolate from them.

This isn't a huge step forward, just further evidence that LLMs are a useful tool in this sense. “The point is that this is as easy as doing a literature search, which works for many chemical problems,” said researcher Berend Smit. “Querying a foundation model could become a routine way to start a project.”
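To make the yes/no framing concrete, here is a hypothetical Python sketch of turning extracted literature statements into prompt/completion pairs, one JSON object per line, roughly the shape GPT-3-era fine-tuning data took. The alloy names, labels, field names and question wording are placeholders, not the EPFL team's actual dataset or prompts.

```python
import json

# Placeholder facts: (alloy name, is it single-phase?). Invented for illustration only.
records: list[tuple[str, bool]] = [
    ("ALLOY_A", True),
    ("ALLOY_B", False),
    ("ALLOY_C", True),
]


def to_example(alloy: str, single_phase: bool) -> dict[str, str]:
    """Turn one yes/no statement into a prompt/completion training pair (assumed field names)."""
    return {
        "prompt": f"Is the high-entropy alloy {alloy} single-phase?",
        "completion": "yes" if single_phase else "no",
    }


def to_jsonl(rows: list[tuple[str, bool]]) -> str:
    """Serialize every example as one JSON object per line (JSON Lines)."""
    return "\n".join(json.dumps(to_example(alloy, label)) for alloy, label in rows)


print(to_jsonl(records))
```

The appeal of this framing is that the model never has to “do chemistry”; it only learns to map a short question to one of two answers, which is why a few hundred labeled statements can go a long way.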

Last, a word of caution from Berkeley researchers, though now that I re-read the post, I see that EPFL was involved with this one too. Go Lausanne! The group found that images found through Google were much more likely to enforce gender stereotypes for certain jobs and words than text mentioning the same thing. And in both cases, there were simply a lot more men present.

Not only that, but in an experiment they found that people who looked at images instead of reading text when researching a role associated those roles with one gender more reliably, even days later. “This isn't just about the prevalence of gender bias online,” said researcher Douglas Guilbeault. “Part of the story here is that there's something very sticky, very powerful about depicting people in pictures that text just doesn't have.”

With things like the Google image generator diversity dispute going on, it's easy to lose sight of the well-established and widely acknowledged fact that the data sources for many AI models have serious biases, and those biases have real effects on people.