Researchers at Meta and the University of California San Diego (UCSD) have developed ToolVerifier, a method that improves how LLMs select and call software tools.

For LLMs to become useful as general assistants or agents, they need to be taught how to use various tools or APIs. Fine-tuning an LLM to use a specific tool works, but the real challenge is getting an LLM to interact with new tools without fine-tuning or few-shot demonstrations.

When two tools are very similar, it can be particularly difficult for the LLM to choose the right one to achieve its goal. The current practice of providing a few examples for every tool can also take up a large portion of the context window available to an LLM.

ToolVerifier is a self-verification method that enables the LLM to ask itself questions to find out which tool to use and what parameters to pass to it.

ToolVerifier works in two steps: it first selects the most suitable tool from a library of options and then generates the corresponding parameters. At each step, the LLM generates verification questions to evaluate its choice and to differentiate between similar candidate tools.
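The two steps can be sketched in a few lines of Python. This is only an illustrative sketch, not the researchers' implementation: the `Tool` class, the prompt tags (`TOP2`, `CONTRAST`, and so on), the `toy_llm` stub with its canned replies, and the choice to drop parameters that fail verification are all assumptions made here so the example runs without a real model.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical stand-in for an LLM call: takes a prompt, returns text.
LLM = Callable[[str], str]


@dataclass
class Tool:
    name: str
    description: str


def select_tool(request: str, library: list[Tool], llm: LLM) -> Tool:
    """Step 1: shortlist two candidates, then verify with a contrastive question."""
    by_name = {t.name: t for t in library}
    listing = "\n".join(f"{t.name}: {t.description}" for t in library)
    # Ask for the two most plausible tools as comma-separated names.
    reply = llm(f"TOP2\nTools:\n{listing}\nRequest: {request}")
    first, second = (by_name[n.strip()] for n in reply.split(",")[:2])
    # Self-verification: pose a question that separates the two candidates,
    # answer it against the request, then commit to one tool.
    question = llm(
        f"CONTRAST\n{first.name}: {first.description}\n"
        f"{second.name}: {second.description}"
    )
    answer = llm(f"ANSWER\nQuestion: {question}\nRequest: {request}")
    final = llm(
        f"FINAL\nCandidates: {first.name}, {second.name}\n"
        f"Question: {question}\nAnswer: {answer}"
    )
    return by_name[final.strip()]


def generate_parameters(request: str, tool: Tool, llm: LLM) -> dict[str, str]:
    """Step 2: propose parameters, then check each one with a yes/no question."""
    raw = llm(f"PARAMS\nTool: {tool.name}\nRequest: {request}")
    proposed = dict(pair.split("=", 1) for pair in raw.split(";") if pair)
    verified = {}
    for key, value in proposed.items():
        check = llm(f"VERIFY\nRequest: {request}\nClaim: {key}={value}")
        # Simplification assumed here: unverified parameters are dropped.
        if check.strip().lower().startswith("yes"):
            verified[key] = value
    return verified


def toy_llm(prompt: str) -> str:
    """Scripted replies keyed on the first prompt line; NOT a real model."""
    lines = prompt.split("\n")
    if lines[0] == "VERIFY":
        value = lines[2].split("=", 1)[1]
        return "yes" if value in lines[1] else "no"
    canned = {
        "TOP2": "BookFlight, BookHotel",
        "CONTRAST": "Does the user want transport or accommodation?",
        "ANSWER": "Transport",
        "FINAL": "BookFlight",
        "PARAMS": "destination=Paris;date=Friday",
    }
    return canned[lines[0]]


library = [
    Tool("BookFlight", "Books a plane ticket to a destination."),
    Tool("BookHotel", "Books a hotel room at a destination."),
]
request = "Book me a flight to Paris on Friday"
tool = select_tool(request, library, toy_llm)
params = generate_parameters(request, tool, toy_llm)
print(tool.name, params)
```

In a real setup, `toy_llm` would be replaced by a call to an actual model, and the tool library would contain only names and descriptions, which is what keeps the prompt short compared with per-tool demonstrations.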

Here is an example from the research work showing the strategy of tool selection and parameter clarification.

ToolVerifier was trained on data consisting of a listing of synthetic tools, including travel, banking, and calendar tools, and their associated descriptions. Training was given to pick the suitable tool based on the title and outline alone.

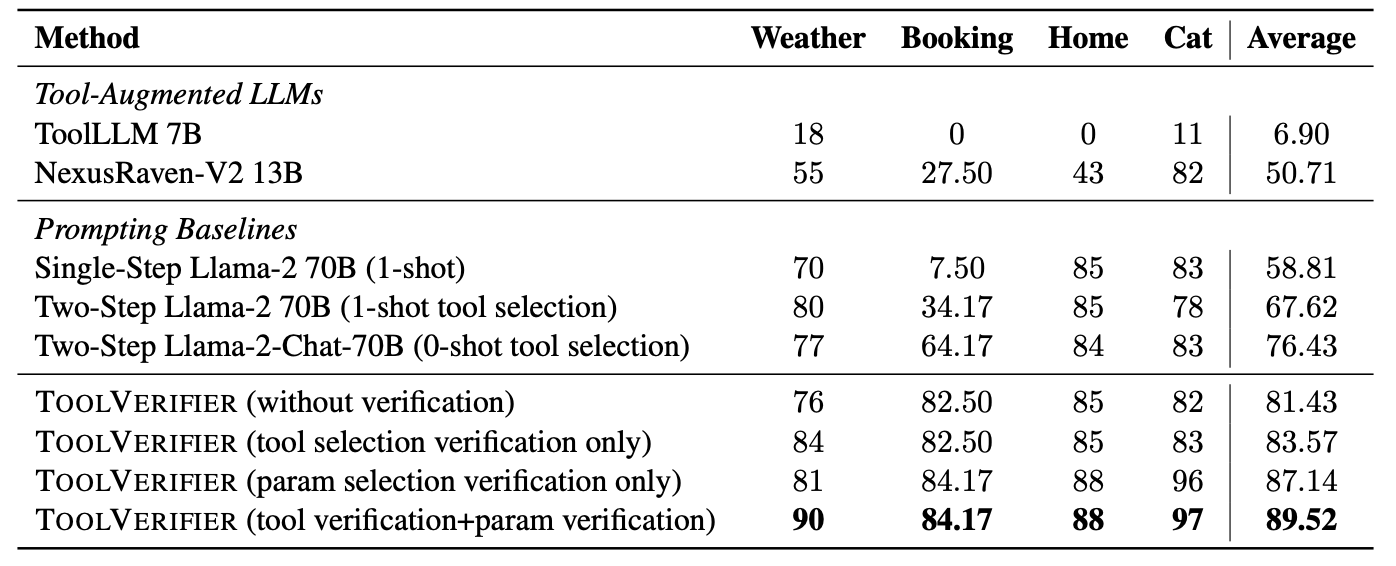

After training the model on tool selection and parameter verification, the researchers tested ToolVerifier on 4 tasks from the ToolBench benchmark, which required Llama 2-70B to interact with 17 previously unseen tools.

The results published in the paper say that using the ToolVerifier method resulted in “an average improvement of 22% over few-shot baselines, even in scenarios where the distinctions between candidate tools are finely nuanced.”

The results show that ToolVerifier significantly improves an LLM's tool selection and parameter generation accuracy. The method has so far only been trained and tested on single-tool interactions, not on tasks that require multiple tools, but it is still promising.

Tool-augmented LLMs are an exciting development in using AI as a generalized agent. Once LLMs learn to use multiple tools to achieve a goal, they will be even more useful to us than they already are.

A future where an AI assistant books a flight, coordinates a meeting, or does your grocery shopping for you doesn't seem far off.