The misidentification of a woman by facial recognition technology in a Rotorua supermarket should not have come as a surprise.

When Foodstuffs North Island announced its intention to trial this technology in February as part of a strategy to combat retail crime, technology and privacy experts immediately raised concerns.

In particular, the risk of discrimination against Māori women and girls with dark skin was raised, and has now been confirmed by what happened to Te Ani Solomon in early April.

Speaking to the media this week, Solomon said she thought ethnicity was a “big factor” in her misidentification. “Unfortunately, if we don't have rules and regulations around this, that will likely be the experience of many Kiwis.”

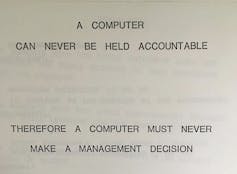

The supermarket company's response that this was a “real case of human error” doesn’t address the deeper questions surrounding such use of AI and automated systems.

Automated decisions and human actions

Automated facial recognition is commonly discussed in the abstract, as purely algorithmic pattern matching, with an emphasis on assessing correctness and accuracy.

These are rightly vital priorities for systems that handle biometric data and security. But with such a critical focus on the outcomes of automated decisions, it is easy to overlook concerns about how those decisions are implemented.

Designers use the term “context of use” to describe the everyday working conditions, tasks and goals of a product. When it comes to facial recognition technology in supermarkets, the context of use goes far beyond traditional design concerns such as ergonomics or usability.

Consideration should be given to how automated trespass notifications trigger store responses, what protocols are in place to manage those responses, and what happens if something goes wrong. These are more than just technology or data issues.

This perspective helps us to understand and weigh the consequences of technical and design interventions at different levels of a system.

Investing in improving predictive accuracy appears to be an obvious priority for facial recognition systems. However, this must be seen in the broader context of use, where the harm caused by a small number of false predictions outweighs minor performance improvements elsewhere.

Retail crime response

New Zealand isn't alone in reporting an increase in shoplifting and violent behavior in stores. It has been described as a “crisis” in the UK, with assault on a retail worker now becoming a standalone offence.

Canadian police are deploying additional resources in a “shoplifting raid.” And in California, retail giants Walmart and Target are pushing for harsher penalties for retail crime.

While these issues have been linked to the rising cost of living, industry group Retail NZ has pointed to profit-oriented organized crime as the primary factor.

The high-profile reporting of brazen thefts and assaults in stores using security footage undoubtedly influences public perception. However, a trend is difficult to measure due to the lack of consistent, unbiased data on shoplifting and offenders.

It is estimated that 15-20% of people in New Zealand experience food insecurity, an issue that has been strongly linked to ethnicity and socioeconomic position. The relationships between cost of living, food insecurity and the black market distribution of stolen food are likely to be complex and nuanced.

Therefore, caution is required when assessing cause and effect, as a shift towards constant surveillance in retail spaces brings risks of harm and impacts on civil society.

AI and human bias

It is commendable that Foodstuffs has engaged with the Privacy Commissioner and been transparent about the safeguards in its protocols for collecting and deleting biometric data. What’s missing is more clarity on store security response protocols.

This is about more than just customer consent to facial recognition cameras. Customers also need to know what happens when a trespass notification is made, and what the dispute resolution process is in the event of misidentification.

Research suggests that human decision makers can inherit biases from AI decisions. In situations of increased stress and increased risk of violence, combining automated facial recognition with ad hoc human judgment is potentially dangerous.

Instead of isolating individual employees or technology components and blaming them as single sources of failure, more emphasis should be placed on resilience and fault tolerance across the whole system.

AI errors and human errors can’t be completely avoided. AI security protocols with “humans in the loop” require more careful safeguards that respect customers’ rights and protect against stereotyping.

Shopping and monitoring

Australian supermarkets have responded to retail crime with overt technological surveillance: body cameras issued to staff (also now adopted by Woolworths in New Zealand), digital tracking of customer movement through stores, automated shopping cart locks and exit gates to prevent people from leaving the store without paying.

Supermarkets could now be at the forefront of a technological shift in the shopping experience. The shift to a surveillance culture in which every customer is monitored as a possible thief is reminiscent of the way global airport security changed after 9/11.

New Zealand product designers, software engineers and data scientists will be closely following the findings of the Privacy Commissioner's review of Foodstuffs' facial recognition trial.

Theft and violence are a pressing problem for supermarkets. But they now need to show that digital surveillance systems are a more responsible, ethical and effective solution than possible alternative approaches.

This means that adopting the technology requires human-centered design to avoid misuse, bias, and harm. This, in turn, will help set regulatory frameworks and standards, stimulate public debate about the appropriate use of AI, and support the development of safer automated systems.