Key Takeaways:

- Compact Power: Phi-3 Mini shows that smaller AI models can deliver strong performance without the resource demands of their larger counterparts.

- On-Device Deployment: The ability to run on smartphones opens up new possibilities for AI applications in mobile contexts.

- Training Optimization: Innovative approaches to optimizing training data enable the development of powerful small-scale AI models.

- Industry Trend: The rise of smaller, efficient AI models reflects a broader industry trend toward making AI more accessible across devices and applications.

Microsoft has introduced Phi-3 Mini, a 3.8-billion-parameter language model and the first release in its Phi-3 series. Despite its compact size, the model can rival larger counterparts in performance while remaining optimized for resource efficiency.

Training Optimization:

Phi-3 Mini’s capabilities stem from innovative training data optimization. By filtering web data and incorporating synthetic data, the researchers created a model that packs a punch despite its small size.

Performance Metrics:

Phi-3 Mini scores 69% on the MMLU benchmark and 8.38 on MT-bench, demonstrating reasoning abilities comparable to those of larger models.

On-Device Deployment:

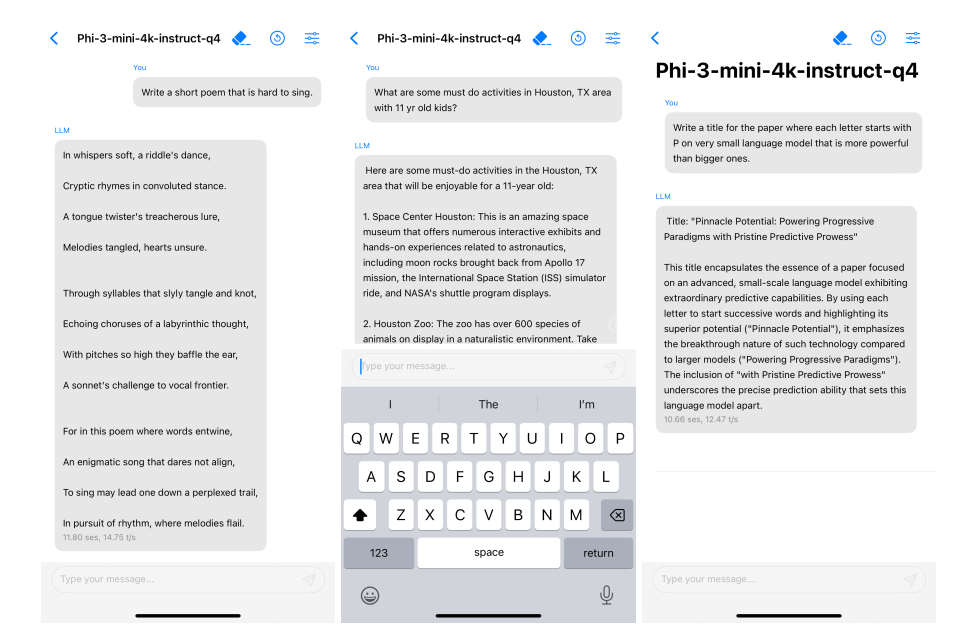

One of Phi-3 Mini’s standout features is its ability to run locally on smartphones. By quantizing the model to 4 bits, the researchers reduced its memory footprint enough to deploy it on devices such as the iPhone 14 with the A16 Bionic chip.
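To make the effect of 4-bit quantization concrete, the sketch below estimates the weight memory of a 3.8-billion-parameter model at 16 bits versus 4 bits per weight, and shows a minimal round trip through 4-bit codes. It is an illustration under stated assumptions, not Microsoft's method: the symmetric per-group scheme, the group size of 32, and the 16-bit per-group scale are all hypothetical choices, and the figures cover weights only (no activations, KV cache, or runtime overhead).

```python
# Minimal sketch of 4-bit weight quantization and its memory arithmetic.
# Assumptions (not from the source): symmetric per-group quantization,
# a hypothetical group size of 32, and one 16-bit scale stored per group.
# The scheme actually used for Phi-3 Mini may differ.

GROUP_SIZE = 32  # hypothetical group size
SCALE_BITS = 16  # hypothetical storage size of each per-group scale


def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Memory needed for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


def quantize_group(weights: list[float]) -> tuple[float, list[int]]:
    """Map a group of floats to signed 4-bit integer codes sharing one scale."""
    max_abs = max(abs(w) for w in weights)
    # Largest magnitude maps to code 7; avoid dividing by zero for all-zero groups.
    scale = max_abs / 7 if max_abs > 0 else 1.0
    # Round to the nearest code and clamp to the signed 4-bit range [-8, 7].
    codes = [max(-8, min(7, round(w / scale))) for w in weights]
    return scale, codes


def dequantize_group(scale: float, codes: list[int]) -> list[float]:
    """Recover approximate floats from the 4-bit codes."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    n = 3.8e9  # Phi-3 Mini parameter count
    print(f"16-bit weights: {weight_memory_gb(n, 16):.1f} GB")
    print(f" 4-bit weights: {weight_memory_gb(n, 4):.1f} GB")
    # Per-group scales add SCALE_BITS / GROUP_SIZE extra bits per weight.
    effective_bits = 4 + SCALE_BITS / GROUP_SIZE
    print(f" 4-bit + scales: {weight_memory_gb(n, effective_bits):.1f} GB")

    group = [0.12, -0.5, 0.33, 0.07]
    scale, codes = quantize_group(group)
    print("codes:", codes)
    print("restored:", [round(x, 3) for x in dequantize_group(scale, codes)])
```

Storing each weight in 4 bits instead of 16 cuts weight memory by a factor of four (roughly 7.6 GB down to 1.9 GB for 3.8 billion parameters), which is what brings a model of this size within reach of a phone's memory. The price is a rounding error of at most half a scale step per weight, which is why smaller groups (more scales, more overhead) generally trade memory for accuracy.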

Phi-3 Series Expansion:

Microsoft plans to expand the Phi-3 series with two additional models: Phi-3 Small (7B parameters) and Phi-3 Medium (14B parameters). Early indications suggest these models will push the capabilities of small AI models further.

Industry Trends:

The development of Phi-3 Mini reflects a broader trend in the AI industry toward smaller, more efficient models that can be deployed on diverse devices, not only on expensive, high-powered machines.

Challenges and Mitigations:

Despite these advances, issues such as bias and safety remain challenging. However, techniques such as careful training data curation and targeted post-training measures have helped mitigate them.

Deployment Availability:

Phi-3 Mini is already available on Microsoft’s Azure cloud platform, the model collaboration site Hugging Face, and the AI model service Ollama, making it readily accessible to developers and researchers.

In Conclusion:

Microsoft’s Phi-3 Mini is a compact AI language model that performs on par with larger models while remaining resource-efficient. It can run on smartphones, opening up possibilities for mobile AI applications. Microsoft plans to expand the Phi-3 series with two more models. The development of smaller, efficient AI models reflects a broader industry trend toward making AI more accessible across devices and applications.
