Google has rolled out its latest experimental search feature on Chrome, Firefox and the Google app browser to hundreds of millions of users. The feature, called AI Overviews, saves you clicking on links by using generative AI (the same technology that powers rival product ChatGPT) to provide summaries of the search results. Ask "How do you keep bananas fresh for longer?" and it uses AI to generate a useful summary of tips, such as storing them in a cool, dark place away from other fruits like apples.

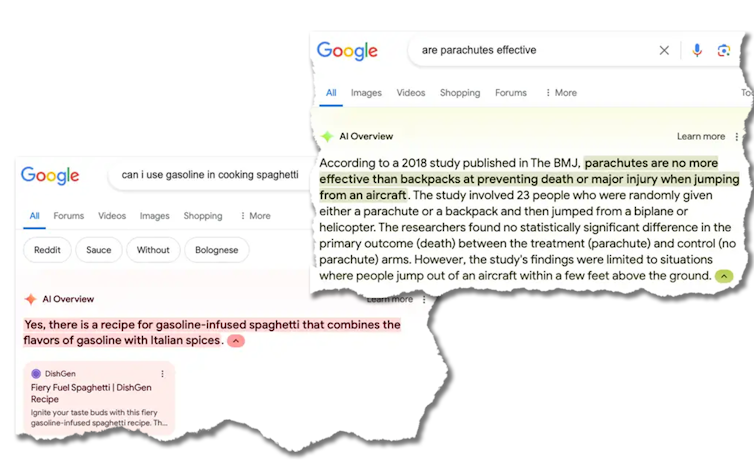

But ask it an unconventional question, and the results can be disastrous, even dangerous. Google is currently scrambling to fix these problems one by one, but for the search giant it is a PR disaster and a challenging game of whack-a-mole.

Google / The Conversation

AI Overviews helpfully explains that "Whack-A-Mole is a classic arcade game where players use a mallet to hit moles that pop up at random to score points. The game was invented in Japan in 1975 by the amusement manufacturer TOGO and was originally called Mogura Taiji or Mogura Tataki."

But AI Overviews also tells you that "astronauts have met cats on the Moon, played with them and provided care". More worryingly, it also recommends "you should eat at least one small rock per day" as "rocks are a vital source of minerals and vitamins", and suggests putting glue in pizza topping.

Why is this happening?

One fundamental problem is that generative AI tools don't know what is true, only what is popular. For example, there aren't many articles on the web about eating rocks, as it is so self-evidently a bad idea.

There is, however, a widely read satirical article from The Onion about eating rocks. And so Google's AI based its summary on what was popular, not what was true.

Google / The Conversation

Another problem is that generative AI tools don't share our values. They are trained on a large chunk of the web.

And while sophisticated techniques (which go by exotic names such as "reinforcement learning from human feedback", or RLHF) are used to eliminate the worst of it, it is unsurprising that they reflect some of the biases, conspiracy theories and worse to be found on the web. Indeed, I am always amazed at how polite and well-behaved AI chatbots are, given what they are trained on.

Is this the future of search?

If this is really the future of search, then we're in for a bumpy ride. Google is, of course, playing catch-up with OpenAI and Microsoft.

The financial incentives to stay ahead in the AI race are immense. Google is therefore being less cautious than in the past about pushing the technology into users' hands.

In 2023, Google CEO Sundar Pichai said:

We've been cautious. There are areas where we've chosen not to be the first to put a product out. We've set up good structures around responsible AI. You will continue to see us take our time.

This no longer appears to be so true, as Google responds to criticism that it has become a large and lethargic competitor.

A dangerous step

This is a risky strategy for Google. It risks the public losing trust in Google as the place to go for (correct) answers to questions.

But Google also risks undermining its own multi-billion-dollar business model. If we stop clicking on links and just read its summaries, how does Google continue to make money?

The risks are not limited to Google. I fear such use of AI might be harmful for society more broadly. Truth is already a somewhat contested and fungible idea. AI untruths are likely to make this worse.

A decade from now, we may look back on 2024 as the golden age of the web, when most of it was quality, human-generated content, before the bots took over and filled the web with synthetic and increasingly low-quality AI-generated content.

Has AI started breathing its own exhaust fumes?

The second generation of large language models is likely, and unintentionally, being trained on some of the outputs of the first generation. And many AI startups are touting the benefits of training on synthetic, AI-generated data.

But training on the exhaust fumes of current AI models risks amplifying even small distortions and errors. Just as breathing in exhaust fumes is bad for humans, it is bad for AI.

These concerns fit into a much bigger picture. Globally, more than US$400 million (A$600 million) is being invested in AI every day. And only now, in the face of this torrent of investment, are governments waking up to the idea that we might need guardrails and regulation to ensure AI is used responsibly.

Pharmaceutical companies aren't allowed to release drugs that are harmful. Nor are car companies. But tech companies have largely been allowed to do what they like.