At the end of May 2024, several media outlets, led by the journalist network Forbidden Stories, published a series of reports entitled “Rwanda Classified”. The reporting provided detailed evidence related to the suspicious death of Rwandan journalist and government critic John Williams Ntwali.

The reports provide additional details about Kigali's efforts to silence critics.

As a political scientist with a background in researching digital disinformation and African politics, I work with the Center for Media Forensics, which searches the web for evidence of coordinated influence operations. Following the publication of Rwanda Classified, we identified at least 464 accounts that flooded online discussions of the reports with content supporting Paul Kagame’s regime.

Many of the accounts we linked to this network have been active on X/Twitter since January 2024. During this time, the network produced over 650,000 messages.

Rwandans will vote on July 15, 2024. The outcome of the presidential election is a foregone conclusion, not least due to the exclusion of opposition candidates, the harassment of journalists and the murder of critics. In the last election, in 2017, Kagame received over 98% of the vote.

Although the outcome is inevitable, accounts in the network have been co-opted to promote Kagame's candidacy online. The inauthentic posts are likely to be used as evidence of the president's popularity and the legitimacy of the election.

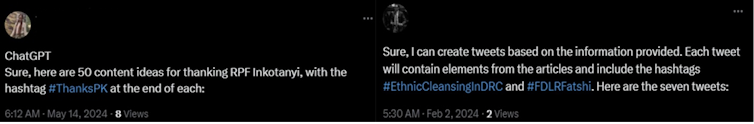

In both the response to Rwanda Classified and the pro-Kagame presidential campaign, we found AI tools being used to disrupt online discussions and promote government narratives. The large language model ChatGPT was one of the tools deployed.

The coordinated use of these tools is worrying. It is a sign that the methods used to manipulate perception and maintain power are becoming increasingly sophisticated. Generative AI enables networks to produce a higher volume of varied content than campaigns run solely by humans.

In this case, the consistency of posting patterns and content tagging made it easier to detect the network. Future campaigns will likely refine these techniques, making it harder to detect inauthentic discussions.

African researchers, policymakers and citizens need to pay attention to the potential challenges posed by the use of generative AI in the production of regional propaganda.

Influence networks

Coordinated influence operations have become commonplace in African digital spaces. While each network is different, all of them aim to make inauthentic content look real.

These operations often “promote” material that aligns with their interests and try to “devalue” other discussions by flooding them with unrelated content. This appears to be the case with the network we identified.

In East Africa alone, social media platforms have removed accounts that were designed to look legitimate and that targeted Ugandan, Tanzanian and Ethiopian citizens with false and biased political information.

Non-state actors, including several global PR firms, have also been blamed for operating bots and websites in South Africa and Rwanda.

Most of these previous influence networks were identified by their use of “copy-pasta”: verbatim text from a central source that is posted by multiple accounts.
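To illustrate how verbatim reuse of this kind can be surfaced, here is a minimal Python sketch. It is not the method used in the investigation: the function names, the `min_accounts` threshold and the sample posts are all hypothetical, and it assumes posts are available as simple (account, text) pairs.

```python
import re
from collections import defaultdict


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences do not hide a match."""
    return re.sub(r"\s+", " ", text.strip().lower())


def find_copypasta(posts, min_accounts=3):
    """Map each normalized text to the accounts that posted it,
    keeping only texts shared by at least `min_accounts` distinct accounts."""
    accounts_by_text = defaultdict(set)
    for account, text in posts:
        accounts_by_text[normalize(text)].add(account)
    return {
        text: accounts
        for text, accounts in accounts_by_text.items()
        if len(accounts) >= min_accounts
    }


# Hypothetical sample data, invented for illustration only.
posts = [
    ("acct_a", "Great progress in our country!"),
    ("acct_b", "great progress in  our country!"),
    ("acct_c", "Great progress in our country!"),
    ("acct_d", "I watched the match yesterday."),
]

clusters = find_copypasta(posts)
for text, accounts in clusters.items():
    print(sorted(accounts), "->", text)
```

Exact matching like this only catches word-for-word reuse, so it would miss text that has been paraphrased or regenerated.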

Unlike these previous campaigns, the pro-Kagame network we identified rarely copied text word for word. Instead, the associated accounts used ChatGPT to create content with similar, but not identical, themes and goals. They then posted the content together with a series of hashtags.
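When the wording varies but the hashtags do not, one simple signal is the overlap between the hashtag sets that different accounts use. The sketch below is an assumption about how such a comparison could look, not a description of the authors' tooling: it computes Jaccard similarity between accounts' hashtag sets, and the threshold, account names and all hashtags other than #ToraKagame2024 are hypothetical.

```python
import re
from itertools import combinations

HASHTAG = re.compile(r"#\w+")


def hashtags(text):
    """Return the lowercase set of hashtags found in one post."""
    return {tag.lower() for tag in HASHTAG.findall(text)}


def jaccard(a, b):
    """Jaccard similarity of two sets; defined as 0.0 when both are empty."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def similar_tagging(posts_by_account, threshold=0.8):
    """Return (account, account, score) for pairs whose hashtag sets overlap
    at or above `threshold`, regardless of how the post text is worded."""
    tagsets = {
        account: set().union(*(hashtags(post) for post in posts))
        for account, posts in posts_by_account.items()
    }
    pairs = []
    for a, b in combinations(sorted(tagsets), 2):
        score = jaccard(tagsets[a], tagsets[b])
        if score >= threshold:
            pairs.append((a, b, score))
    return pairs


# Hypothetical sample data: differently worded posts with the same tags.
posts_by_account = {
    "acct_a": ["Proud of what we have built together. #ToraKagame2024 #ExampleTag"],
    "acct_b": ["Our nation keeps moving forward! #toraKagame2024 #exampletag"],
    "acct_c": ["What a goal last night #Football"],
}

print(similar_tagging(posts_by_account))
```

High overlap alone does not prove coordination, since genuine supporters also share popular hashtags. It is better treated as a prompt for closer manual review.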

The work was sloppy, probably due to the inexperience of the actors involved in the campaign. Errors in text generation allowed us to track down connected accounts. For example, in certain messages, connected accounts inserted instructions on how to create pro-Kagame propaganda.

These messages were then used to flood legitimate discussions with either unrelated or pro-government content. This included details about Rwanda’s ties to sports clubs and direct attempts to discredit the journalists and media outlets involved in the Rwanda Classified investigation.

In recent weeks, several accounts in the coordinated network have been spreading election-related hashtags such as #ToraKagame2024 (“tora” means “vote”). Due to the large number of posts the network generates, interested readers are likely to come across content that looks like uncritical support for the country and its leader.
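Leaked instructions of this kind can be searched for with simple pattern matching. The following Python sketch shows the general idea only: the phrases in `LEAK_PATTERNS` are illustrative guesses at what leaked prompt text might look like, not the actual strings found in the network, and the sample posts are invented.

```python
import re

# Illustrative patterns only; a real investigation would build this list
# from leaked text actually observed in the data.
LEAK_PATTERNS = [
    r"\bas an ai language model\b",
    r"^\s*(?:sure|certainly)[,!]? here (?:is|are)\b",
    r"\b(?:write|generate|create) (?:a|\d+) (?:tweet|post)s?\b",
]
LEAK_RE = re.compile(
    "|".join(f"(?:{pattern})" for pattern in LEAK_PATTERNS),
    re.IGNORECASE,
)


def flag_leaks(posts):
    """Return the sorted list of accounts with at least one post matching a leak pattern."""
    return sorted({account for account, text in posts if LEAK_RE.search(text)})


# Hypothetical sample data, invented for illustration only.
posts = [
    ("acct_a", "Sure, here is a tweet praising the president: ..."),
    ("acct_b", "Write a tweet that supports the government and add hashtags"),
    ("acct_c", "Lovely weather in Kigali today."),
]

print(flag_leaks(posts))
```

Accounts flagged this way are only leads. Ordinary users also write such phrases, so each match needs to be checked by hand before an account is linked to a network.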


AI and Propaganda

Integrating AI tools into online campaigns has the potential to transform the impact of propaganda for several reasons.

Scale and efficiency: AI tools enable the rapid production of large volumes of content. Producing the same output without these tools would require more resources, people and time.

Borderless reach: Techniques such as automatic translation allow actors to influence discussions across borders. For example, one of the most common targets of the inauthentic Rwandan network was the conflict in eastern Democratic Republic of Congo.

Network mapping: Certain behavioral patterns can indicate coordination between accounts. Generative AI tools, however, allow for the seamless creation of subtle variations in text that make attribution difficult.

What can be done

Since citizens are the primary target of most influence operations, they need to be prepared to navigate the evolution of these efforts. Governments, NGOs and educators should consider expanding digital literacy programs to make it easier to deal with digital threats.

Improved communication is also needed between operators of social media platforms like X/Twitter and providers of large language model services like OpenAI, both of which help influence networks to flourish. When inauthentic activity can be attributed to specific actors, operators should consider temporary bans or outright exclusion.

Finally, governments should work to raise the costs of improper use of AI tools. Without real consequences, such as aid restrictions or targeted sanctions, self-interested actors will continue to experiment with increasingly powerful AI tools with impunity.