Brain-computer interfaces are a groundbreaking technology that can help paralyzed people regain functions they have lost, such as moving a hand. These devices record signals from the brain and decode the user's intended action, bypassing damaged or degraded nerves that would normally carry those brain signals to the muscles they control.

Since 2006, demonstrations of brain-computer interfaces in humans have primarily focused on restoring arm and hand movements by enabling people to control computer cursors or robotic arms. Recently, researchers have begun developing speech brain-computer interfaces to restore communication for people who cannot speak.

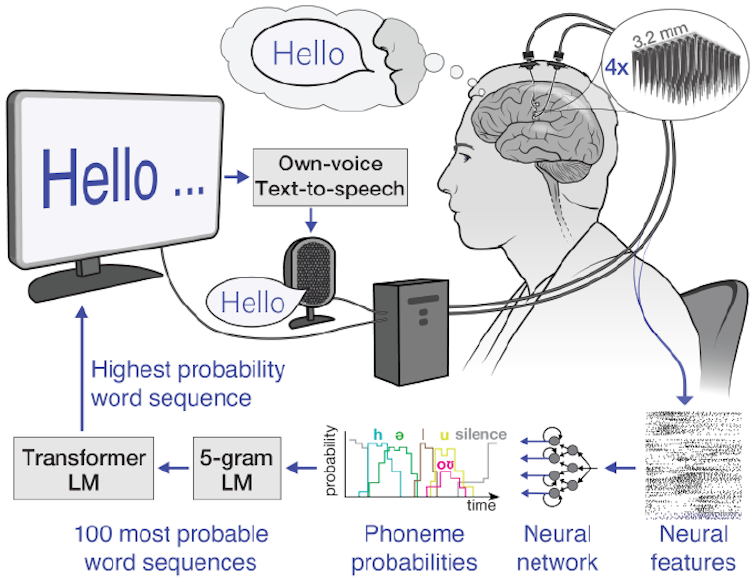

As the user attempts to speak, these brain-computer interfaces record the person's unique brain signals associated with the attempted muscle movements for speaking and then translate them into words. These words can then be displayed as text on a screen or spoken aloud using text-to-speech software.

I'm a researcher in the Neuroprosthetics Laboratory at the University of California, Davis, which is part of the BrainGate2 clinical trial. My colleagues and I recently demonstrated a speech brain-computer interface that deciphers the attempted speech of a man with ALS, or amyotrophic lateral sclerosis, also known as Lou Gehrig's disease. The interface converts neural signals into text with over 97% accuracy. The key to our system is a set of artificial intelligence language models: artificial neural networks that help interpret natural language.

Recording brain signals

The first step in our speech brain-computer interface is recording brain signals. There are several sources of brain signals, some of which require surgery to record. Surgically implanted recording devices can capture high-quality brain signals because they are placed closer to the neurons, resulting in stronger signals with less interference. These neural recording devices include grids of electrodes placed on the surface of the brain and electrodes implanted directly into brain tissue.

In our study, we used electrode arrays surgically placed in the motor cortex of the participant, Casey Harrell. This is the part of the brain that controls speech-related muscles. While Harrell attempted to speak, we recorded neural activity from 256 electrodes.

UC Davis Health

Decoding brain signals

The next challenge is to relate the complex brain signals to the words the user is trying to say.

One approach is to map neural activity patterns directly to spoken words. This method requires recording the brain signals corresponding to each word multiple times to learn the average relationship between neural activity and specific words. While this strategy works well for small vocabularies, as in a 2021 study with a 50-word vocabulary, it becomes impractical for larger ones. Imagine asking the brain-computer interface user to try to say every word in the dictionary multiple times. It could take months, and it still wouldn't work for new words.

Instead, we use a different strategy: we map brain signals to phonemes, the basic units of sound that make up words. There are 39 phonemes in English, including ch, er, oo, pl and sh, which can be combined to form any word. We can measure the neural activity associated with each phoneme multiple times simply by asking the participant to read a few sentences aloud. By accurately mapping neural activity to phonemes, we can assemble them into any English word, even words the system was never explicitly trained on.
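To see why phonemes scale to an open vocabulary, consider this minimal Python sketch. The tiny pronunciation table and its ARPAbet-style phoneme symbols are illustrative assumptions, not the lexicon used in the study. The point it makes is that a new word needs only a pronunciation entry, not new brain recordings, as long as its phonemes have already been observed.

```python
# Hypothetical mini lexicon: word -> phoneme sequence (ARPAbet-style symbols).
# These four entries stand in for words covered by the training sentences.
LEXICON = {
    "she": ("SH", "IY"),
    "chew": ("CH", "UW"),
    "shoe": ("SH", "UW"),
    "cheese": ("CH", "IY", "Z"),
}


def known_phonemes(lexicon):
    """Return the set of phonemes that appear in at least one known word."""
    return {phone for phones in lexicon.values() for phone in phones}


def words_for(phonemes, lexicon):
    """Return every word whose pronunciation matches the phoneme sequence."""
    target = tuple(phonemes)
    return [word for word, phones in lexicon.items() if phones == target]


# Phonemes the decoder has already had a chance to learn.
inventory = known_phonemes(LEXICON)

# A word that never appeared in training, built from familiar phonemes.
new_word, new_phones = "shoes", ("SH", "UW", "Z")
can_decode_new_word = set(new_phones) <= inventory

# Supporting it only requires adding its pronunciation to the lexicon.
LEXICON[new_word] = new_phones
print(can_decode_new_word, words_for(["SH", "UW", "Z"], LEXICON))
```

A word-level decoder would instead need fresh recordings of every new word before it could recognize it.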

To map brain signals to phonemes, we use advanced machine learning models. These models are particularly well suited to the task because they can find patterns in large amounts of complex data that would be impossible for humans to discern. Think of these models as super-intelligent listeners that can pick out the important information from noisy brain signals, much as you might focus on one conversation in a crowded room. Using these models, we were able to decipher phoneme sequences during attempted speech with over 90% accuracy.
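A common way to put a number on phoneme decoding accuracy is the phoneme error rate: the edit distance between the decoded and intended phoneme sequences, divided by the length of the intended sequence. The sketch below shows that calculation in Python. Treating it as the metric behind the figure above is an assumption, and the example sequences are made up for illustration.

```python
def edit_distance(reference, hypothesis):
    """Levenshtein distance: minimum substitutions, insertions and deletions."""
    previous = list(range(len(hypothesis) + 1))
    for i, ref_phone in enumerate(reference, start=1):
        current = [i]
        for j, hyp_phone in enumerate(hypothesis, start=1):
            substitution_cost = 0 if ref_phone == hyp_phone else 1
            current.append(min(
                previous[j] + 1,                      # deletion
                current[j - 1] + 1,                   # insertion
                previous[j - 1] + substitution_cost,  # match or substitution
            ))
        previous = current
    return previous[-1]


def phoneme_error_rate(reference, hypothesis):
    """Fraction of reference phonemes that were decoded incorrectly."""
    if not reference:
        raise ValueError("reference sequence must not be empty")
    return edit_distance(reference, hypothesis) / len(reference)


# Made-up example: "hello world" with the final phoneme decoded wrongly.
intended = ["HH", "AH", "L", "OW", "W", "ER", "L", "D"]
decoded = ["HH", "AH", "L", "OW", "W", "ER", "L", "T"]

per = phoneme_error_rate(intended, decoded)
print(f"phoneme error rate: {per:.3f}, accuracy: {1 - per:.3f}")
```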

From phonemes to words

Once we have the decoded phoneme sequences, we need to convert them into words and sentences. This is challenging, especially when the decoded phoneme sequence is not entirely accurate. To solve this puzzle, we use two complementary types of machine learning language models.

The first is n-gram language models, which predict which word is most likely to follow a sequence of words. We trained a 5-gram, or five-word, language model on millions of sentences to predict the probability of a word based on the previous four words, capturing local context and common expressions. For example, after “I'm superb,” it might suggest “today” as more likely than “potato.” Using this model, we convert our phoneme sequences into the 100 most likely word sequences, each with an associated probability.
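The idea behind an n-gram model can be sketched in a few lines of Python. This toy version counts which word follows each four-word context in a four-sentence corpus. The corpus, the class name `NGramModel` and the add-one smoothing are all assumptions made for illustration; a model trained on millions of sentences would use far more data and more careful smoothing.

```python
from collections import Counter, defaultdict


class NGramModel:
    """Toy count-based n-gram model with add-one (Laplace) smoothing."""

    def __init__(self, n):
        if n < 2:
            raise ValueError("n must be at least 2")
        self.n = n
        self.counts = defaultdict(Counter)  # context tuple -> next-word counts
        self.vocab = set()

    def train(self, sentences):
        for sentence in sentences:
            # Pad the start so the first words also have a full context.
            words = ["<s>"] * (self.n - 1) + sentence.lower().split() + ["</s>"]
            self.vocab.update(words)
            for i in range(self.n - 1, len(words)):
                context = tuple(words[i - self.n + 1:i])
                self.counts[context][words[i]] += 1

    def prob(self, context, word):
        """Smoothed probability of `word` given the previous n-1 words."""
        padded = ["<s>"] * (self.n - 1) + [w.lower() for w in context]
        key = tuple(padded[-(self.n - 1):])
        seen = self.counts.get(key, Counter())
        return (seen[word.lower()] + 1) / (sum(seen.values()) + len(self.vocab))


# Made-up training corpus, for illustration only.
corpus = [
    "i am very good today",
    "i am very good at chess",
    "she is very good today",
    "the potato is very good",
]

model = NGramModel(n=5)
model.train(corpus)

context = ["i", "am", "very", "good"]
p_today = model.prob(context, "today")
p_potato = model.prob(context, "potato")
print(f"P(today)={p_today:.3f}  P(potato)={p_potato:.3f}")
```

Even on this tiny corpus, “today” scores higher than “potato” after the same four words, because it has been seen in that position and “potato” has not.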

The second is large language models, which power AI chatbots and also predict which words are most likely to follow others. We use large language models to refine our choices. These models, trained on vast amounts of diverse text, have a broader understanding of language structure and meaning. They help us determine which of our 100 candidate sentences makes the most sense in a wider context.

By carefully weighing the probabilities from the n-gram model, the large language model and our initial phoneme predictions, we can make a highly educated guess about what the brain-computer interface user is trying to say. This multistep process allows us to overcome the uncertainties of phoneme decoding and produce coherent, contextually appropriate sentences.
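One simple way to weigh several sources of evidence is a weighted sum of log-probabilities, sketched below in Python. The `Candidate` fields, the weights and every score are invented for illustration; how the actual system balances its three sources is not specified here, so treat this as one plausible scheme rather than the method itself.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    text: str
    phoneme_logp: float  # how well the sentence matches the decoded phonemes
    ngram_logp: float    # log-probability under the n-gram model
    llm_logp: float      # log-probability under the large language model


def combined_score(candidate, w_phoneme=1.0, w_ngram=0.5, w_llm=0.5):
    """Weighted sum of log-probabilities; the weights are hypothetical."""
    return (w_phoneme * candidate.phoneme_logp
            + w_ngram * candidate.ngram_logp
            + w_llm * candidate.llm_logp)


def best_candidate(candidates, **weights):
    """Pick the candidate sentence with the highest combined score."""
    return max(candidates, key=lambda c: combined_score(c, **weights))


# Invented scores: the second sentence fits the noisy phonemes slightly
# better, but both language models consider it much less plausible.
candidates = [
    Candidate("i am very good today", -2.0, -4.0, -3.0),
    Candidate("i am very good potato", -1.8, -9.0, -12.0),
]

phoneme_only = best_candidate(candidates, w_ngram=0.0, w_llm=0.0)
all_sources = best_candidate(candidates)
print("phonemes only:", phoneme_only.text)
print("all sources:  ", all_sources.text)
```

With the language models switched off, the slightly better phoneme match wins and produces a nonsensical sentence. Adding their scores tips the choice toward the sentence that makes sense.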


Advantages in practice

In practice, this speech decoding strategy has proven remarkably successful. We have enabled Casey Harrell, a man with ALS, to “speak” using only his thoughts with over 97% accuracy. Thanks to this breakthrough, he can now easily converse with his family and friends for the first time in years, all from the comfort of his own home.

Speech brain-computer interfaces represent a major advance in restoring communication. As we continue to refine these devices, they hold the promise of giving a voice to people who have lost the ability to speak and reconnecting them with their loved ones and the world around them.

However, challenges remain, such as making the technology more accessible, portable and durable over years of use. Despite these hurdles, speech brain-computer interfaces are a powerful example of how science and technology can come together to solve complex problems and dramatically improve people's lives.