If your jaw dropped while watching the latest AI-generated video, your bank balance was protected from criminals by a fraud detection system, or your day was made a little easier because you could dictate a text message on the go, you have many scientists, mathematicians and engineers to thank.

But two names stand out for foundational contributions to the deep learning technology that makes these experiences possible: Princeton University physicist John Hopfield and University of Toronto computer scientist Geoffrey Hinton.

The two researchers were awarded the Nobel Prize in Physics on Oct. 8, 2024, for their pioneering work in the field of artificial neural networks. Although artificial neural networks are modeled on biological neural networks, both researchers' work drew on statistical physics, which is why the prize was awarded in physics.


How a neuron calculates

Artificial neural networks owe their origins to the study of biological neurons in living brains. In 1943, neurophysiologist Warren McCulloch and logician Walter Pitts proposed a simple model of how a neuron works. In the McCulloch-Pitts model, a neuron is connected to its neighboring neurons and can receive signals from them. It can then combine these signals to send signals to other neurons.

But there's a twist: signals coming from different neighbors can be weighted differently. Imagine you're considering buying a new best-selling phone. You talk to your friends and ask them for their recommendations. A simple strategy is to collect all your friends' recommendations and follow whatever the majority says. For example, you ask three friends, Alice, Bob and Charlie, and they say yes, yes and no. This leads you to decide to buy the phone, because you have two yes votes and one no vote.

However, you might trust some friends more because they have in-depth knowledge of technical gadgets. So you might decide to give more weight to their recommendations. For example, if Charlie is very knowledgeable, you might count his no three times, and now you decide not to buy the phone: two yeses and three nos. If you're unlucky enough to have a friend you completely distrust on technical matters, you might even assign them a negative weight. Then their “yes” counts as a “no,” and their “no” counts as a “yes.”
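The phone decision above is exactly a weighted threshold neuron. Below is a minimal sketch, assuming choices of our own: “yes” is encoded as +1, “no” as -1, and the neuron “fires” (buy) only when the weighted sum is strictly positive. The names `neuron`, `YES` and `NO` are hypothetical.

```python
# A weighted "vote" neuron in the spirit of the McCulloch-Pitts model.
# Assumed encoding (for illustration): yes = +1, no = -1, threshold = 0.
YES, NO = 1, -1

def neuron(inputs, weights, threshold=0):
    """Fire (return True) if the weighted sum of inputs exceeds the threshold.

    A tie (sum exactly equal to the threshold) counts as not firing.
    """
    total = sum(w * x for x, w in zip(inputs, weights))
    return total > threshold

votes = [YES, YES, NO]               # Alice, Bob, Charlie

# Everyone counts equally: 2 yes vs 1 no -> buy.
print(neuron(votes, [1, 1, 1]))      # True

# Charlie's "no" counts three times: 2 yes vs 3 no -> don't buy.
print(neuron(votes, [1, 1, 3]))      # False

# Bob is distrusted (negative weight), so his "yes" acts as a "no":
# 1 yes vs 2 no -> don't buy.
print(neuron(votes, [1, -1, 1]))     # False
```

Changing only the weights, never the votes, is what flips the decision; learning in neural networks amounts to finding good weights.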

Once you've decided for yourself whether the new phone is a good choice, other friends can ask you for your recommendation. Likewise, neurons in artificial and biological neural networks can collect signals from their neighbors and send a signal to other neurons. This capability leads to an important distinction: Is there a cycle in the network? For example, if I ask Alice, Bob and Charlie today, and tomorrow Alice asks me for my recommendation, then there is a cycle: from Alice to me, and from me back to Alice.

Zawersh/Wikimedia, CC BY-SA

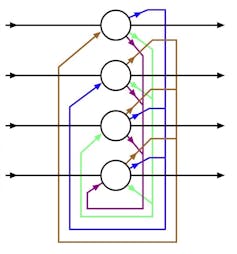

When the connections between neurons have no cycle, computer scientists speak of a feedforward neural network. The neurons in a feedforward network can be arranged in layers. The first layer consists of the inputs. The second layer receives its signals from the first layer, and so on. The last layer represents the outputs of the network.
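The layer-by-layer flow can be sketched in a few lines. This is a minimal illustration, assuming threshold neurons like the one in the phone example, two inputs, a hidden layer of two neurons and one output neuron. The weights are hand-picked (hypothetical) so that the network computes “exclusive or,” a function no single threshold neuron can compute on its own.

```python
def step(total):
    """Threshold activation: the neuron fires (1) only if its input is positive."""
    return 1 if total > 0 else 0

def layer_output(inputs, weights, biases):
    """Each neuron in a layer sees every output of the previous layer."""
    return [step(sum(w * x for w, x in zip(row, inputs)) + bias)
            for row, bias in zip(weights, biases)]

def feedforward(inputs, layers):
    """Signals move strictly from one layer to the next; nothing flows back."""
    signal = inputs
    for weights, biases in layers:
        signal = layer_output(signal, weights, biases)
    return signal

# Hypothetical hand-picked weights: the hidden layer computes OR and AND,
# and the output fires when OR is on but AND is off (exclusive or).
XOR_NET = [
    ([[1, 1], [1, 1]], [-0.5, -1.5]),  # hidden layer: OR neuron, AND neuron
    ([[1, -1]], [-0.5]),               # output layer
]

for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(x, feedforward(x, XOR_NET))
```

For the four inputs this prints the outputs `[0]`, `[1]`, `[1]`, `[0]`. The hidden layer is what makes this possible: it re-describes the inputs so that the output neuron can separate them with a single weighted sum.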

However, when there is a cycle in the network, computer scientists call it a recurrent neural network, and the arrangement of neurons can be more complicated than in feedforward neural networks.
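Whether a network is feedforward or recurrent depends only on its connection graph, so it can be checked mechanically. Here is a minimal sketch, assuming the network is given as a dictionary that maps each neuron to the neurons it sends signals to (a representation chosen for illustration). It uses depth-first search to look for a cycle, reusing the Alice example.

```python
def has_cycle(graph):
    """Depth-first search: reaching a node already on the current path is a cycle.

    `graph` maps each neuron to the list of neurons it sends signals to.
    """
    UNSEEN, ON_PATH, DONE = 0, 1, 2
    nodes = set(graph) | {t for targets in graph.values() for t in targets}
    state = {node: UNSEEN for node in nodes}

    def visit(node):
        state[node] = ON_PATH
        for nxt in graph.get(node, ()):
            if state[nxt] == ON_PATH:
                return True              # came back to a node on this path
            if state[nxt] == UNSEEN and visit(nxt):
                return True
        state[node] = DONE
        return False

    return any(state[n] == UNSEEN and visit(n) for n in nodes)

def classify(graph):
    return "recurrent" if has_cycle(graph) else "feedforward"

# Today: each friend's recommendation flows to "me".
today = {"Alice": ["me"], "Bob": ["me"], "Charlie": ["me"]}
# Tomorrow Alice also asks me, so a signal flows from me back to Alice.
tomorrow = {"Alice": ["me"], "Bob": ["me"], "Charlie": ["me"], "me": ["Alice"]}

print(classify(today))     # feedforward
print(classify(tomorrow))  # recurrent
```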

Hopfield network

The initial inspiration for artificial neural networks came from biology, but other fields soon started to shape their development. These included logic, mathematics and physics. Physicist John Hopfield used ideas from physics to study a particular type of recurrent neural network, now called the Hopfield network. In particular, he studied its dynamics: What happens to the network over time?

Such dynamics also matter when information spreads through social networks. Everyone is familiar with memes going viral and echo chambers forming in online social networks. These are all collective phenomena that ultimately arise from simple exchanges of information between people in the network.

Hopfield was a pioneer in using models from physics, especially those developed to study magnetism, to understand the dynamics of recurrent neural networks. He also showed that these dynamics can give such neural networks a form of memory.
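The memory idea can be illustrated with a tiny network. This is a minimal sketch of the textbook Hopfield formulation, not Hopfield's own code: units take values +1 or -1 (like the up and down spins of a magnet), weights are set by a Hebbian rule, and units are updated one at a time. The two stored patterns, the network size and the function names are made up for illustration.

```python
import random

def store(patterns):
    """Hebbian rule: W[i][j] = (1/N) * sum over patterns of p[i] * p[j], no self-links."""
    n = len(patterns[0])
    W = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    W[i][j] += p[i] * p[j] / n
    return W

def energy(W, s):
    """Magnet-style energy; one-at-a-time updates never increase it."""
    n = len(s)
    return -0.5 * sum(W[i][j] * s[i] * s[j] for i in range(n) for j in range(n))

def recall(W, state, max_sweeps=20, seed=0):
    """Let each unit align with its weighted input until nothing changes."""
    rng = random.Random(seed)
    s = list(state)
    n = len(s)
    for _ in range(max_sweeps):
        changed = False
        for i in rng.sample(range(n), n):      # random update order
            field = sum(W[i][j] * s[j] for j in range(n))
            new = 1 if field > 0 else -1 if field < 0 else s[i]
            if new != s[i]:
                s[i], changed = new, True
        if not changed:                        # stable state reached
            break
    return s

A = [1, 1, 1, 1, -1, -1, -1, -1]
B = [1, -1, 1, -1, 1, -1, 1, -1]
W = store([A, B])

noisy = [-A[0]] + A[1:]          # corrupt the first unit of pattern A
print(recall(W, noisy) == A)     # True
```

Starting from a corrupted version of a stored pattern, the dynamics slide downhill in energy to the nearest stored pattern. That is the sense in which the network “remembers,” and it is the error-correcting behavior compared to a spell checker in the next section.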

Boltzmann machines and backpropagation

In the 1980s, Geoffrey Hinton, computational neurobiologist Terrence Sejnowski and others extended Hopfield's ideas to create a new class of models called Boltzmann machines, named after the 19th-century physicist Ludwig Boltzmann. As the name implies, the design of these models is rooted in the statistical physics pioneered by Boltzmann. Unlike Hopfield networks, which could store patterns and correct errors in patterns, as a spell checker does, Boltzmann machines could generate new patterns, laying the groundwork for the modern generative AI revolution.
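How does a Boltzmann machine “generate” patterns? In the standard formulation, each configuration of binary units gets an energy, and the machine samples configurations with probability proportional to exp(-energy), Boltzmann's distribution from statistical physics. The sketch below is illustrative only: the 3-unit weights and biases are hypothetical and hand-set, training is omitted entirely, and it shows just the generative side, using one-unit-at-a-time (Gibbs) sampling and checking it against the exact distribution.

```python
import itertools
import math
import random

# Hypothetical, hand-set parameters for a 3-unit machine (no training here).
# W is symmetric with zeros on the diagonal, as in a Hopfield network.
W = [[0.0, 1.0, -1.0],
     [1.0, 0.0, -1.0],
     [-1.0, -1.0, 0.0]]
b = [0.0, 0.0, 0.5]

def energy(s):
    """E(s) = -sum_{i<j} W[i][j] s_i s_j - sum_i b[i] s_i for 0/1 units."""
    n = len(s)
    pairs = sum(W[i][j] * s[i] * s[j] for i in range(n) for j in range(i + 1, n))
    return -pairs - sum(bi * si for bi, si in zip(b, s))

def exact_distribution():
    """Boltzmann distribution p(s) = exp(-E(s)) / Z, by brute force."""
    states = list(itertools.product([0, 1], repeat=len(b)))
    unnormalized = [math.exp(-energy(s)) for s in states]
    Z = sum(unnormalized)
    return {s: u / Z for s, u in zip(states, unnormalized)}

def gibbs_samples(n_samples, burn_in=200, seed=0):
    """Generate patterns by resampling one unit at a time given the others."""
    rng = random.Random(seed)
    s = [rng.randint(0, 1) for _ in b]
    samples = []
    for sweep in range(burn_in + n_samples):
        for i in range(len(s)):
            field = sum(W[i][j] * s[j] for j in range(len(s))) + b[i]
            p_on = 1.0 / (1.0 + math.exp(-field))   # P(s_i = 1 | other units)
            s[i] = 1 if rng.random() < p_on else 0
        if sweep >= burn_in:
            samples.append(tuple(s))
    return samples

dist = exact_distribution()
samples = gibbs_samples(20000)
for state, p in sorted(dist.items()):
    freq = samples.count(state) / len(samples)
    print(state, f"exact {p:.3f}  sampled {freq:.3f}")
```

Low-energy patterns such as (1, 1, 0), where the positively linked units 0 and 1 are both on, come up most often. Training a real Boltzmann machine means adjusting `W` and `b` so that the patterns it generates resemble its training data.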

Hinton was also part of another breakthrough of the 1980s: backpropagation. If artificial neural networks are to do interesting tasks, you have to somehow choose the right weights for the connections between artificial neurons. Backpropagation is a key algorithm that makes it possible to choose weights based on the network's performance on a training dataset. However, training artificial neural networks with many layers remained a challenge.
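The core of backpropagation is the chain rule: measure the output error, then pass it backward through the network to find how much each weight contributed to it. Below is a minimal sketch in a toy setting of our own choosing: one hidden layer of tanh neurons, a squared-error loss, plain stochastic gradient descent and the “exclusive or” task as training data. All function names are hypothetical, and practical systems typically rely on libraries that compute these gradients automatically.

```python
import math
import random

def init_params(n_in, n_hidden, seed=0):
    """Small random starting weights; the seed makes runs repeatable."""
    rng = random.Random(seed)
    W1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    return W1, b1, w2, 0.0

def forward(params, x):
    """One tanh hidden layer followed by a single linear output neuron."""
    W1, b1, w2, b2 = params
    h = [math.tanh(sum(w * xk for w, xk in zip(row, x)) + bj)
         for row, bj in zip(W1, b1)]
    y = sum(w * hj for w, hj in zip(w2, h)) + b2
    return h, y

def loss(params, x, t):
    """Squared error between the network's output and the target t."""
    _, y = forward(params, x)
    return 0.5 * (y - t) ** 2

def backprop(params, x, t):
    """Chain rule, applied from the output layer back toward the inputs."""
    W1, b1, w2, b2 = params
    h, y = forward(params, x)
    dy = y - t                                    # dLoss/dy
    dw2 = [dy * hj for hj in h]                   # output-layer weights
    db2 = dy
    # Error sent back through each output weight, then through tanh' = 1 - tanh^2.
    dz = [dy * w * (1 - hj ** 2) for w, hj in zip(w2, h)]
    dW1 = [[dzj * xk for xk in x] for dzj in dz]  # hidden-layer weights
    db1 = dz
    return dW1, db1, dw2, db2

def sgd_step(params, x, t, lr=0.1):
    """Nudge every weight a little against its gradient."""
    W1, b1, w2, b2 = params
    dW1, db1, dw2, db2 = backprop(params, x, t)
    W1 = [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(W1, dW1)]
    b1 = [b - lr * g for b, g in zip(b1, db1)]
    w2 = [w - lr * g for w, g in zip(w2, dw2)]
    return W1, b1, w2, b2 - lr * db2

XOR = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]

def total_loss(params, data):
    return sum(loss(params, x, t) for x, t in data)

params = init_params(n_in=2, n_hidden=3)
before = total_loss(params, XOR)
for epoch in range(2000):
    for x, t in XOR:
        params = sgd_step(params, x, t)
print(f"loss before: {before:.3f}, after: {total_loss(params, XOR):.3f}")
```

A common sanity check, used in the tests for this sketch, is to compare each backpropagated gradient with a finite-difference estimate of how the loss changes when that single weight is nudged.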

In the 2000s, Hinton and his colleagues cleverly used Boltzmann machines to train multilayer networks: they first pretrained the network layer by layer and then applied another fine-tuning algorithm to the pretrained network to further adjust the weights. Multilayer networks were rechristened deep networks, and the deep learning revolution had begun.

AI pays it back to physics

The Nobel Prize in Physics shows how ideas from physics contributed to the rise of deep learning. Now deep learning has begun to repay physics, enabling accurate and fast simulations of systems ranging from molecules and materials to Earth's entire climate.

In awarding the Nobel Prize in Physics to Hopfield and Hinton, the prize committee expressed its hope in humanity's potential to use these advances to promote human well-being and build a sustainable world.