The arrival of AI systems called large language models (LLMs), such as OpenAI's ChatGPT chatbot, has been heralded as the start of a new technological era. And they may indeed have a significant impact on how we live and work in the future.

But they didn't appear out of nowhere, and they have a much longer history than most people realize. In fact, most of us have been using the approaches they're based on in our existing technology for years.

LLMs are a particular type of language model, which is a mathematical representation of language based on probabilities. If you've ever used predictive text on a mobile phone or asked a smart speaker a question, then you've almost certainly already used a language model. But what do they actually do, and what does it take to make one?

Language models are designed to estimate how likely it is to see a particular sequence of words. This is where probabilities come in. For example, a good language model for English would assign a high probability to a well-formed sentence like “The old black cat was fast asleep” and a low probability to a random sequence of words like “library a or the quantum some”.

Most language models can also reverse this process to generate plausible-looking text. The predictive text on your smartphone uses a language model to anticipate how you might want to complete text as you type.

The earliest approach to building language models was described in 1951 by Claude Shannon, a researcher working at Bell Labs. His approach was based on sequences of words known as n-grams – say, “old black man” or “cat slept soundly”. The probability of n-grams appearing in text was estimated by looking for examples in existing documents. These probabilities were then combined to calculate the overall probability of longer sequences of words, such as complete sentences.
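
To make the idea concrete, here is a minimal sketch of a bigram (two-word) model in Python. It is an illustration only, not Shannon's original method: the three-sentence corpus, the `<s>` start marker and the function names are all invented for this example, and real systems train on vastly more text.

```python
from collections import Counter

# Toy "existing documents" (an assumption made for illustration).
corpus = [
    "the old black cat was fast asleep",
    "the old dog was fast asleep",
    "the black cat slept soundly",
]

def train_bigram_model(sentences):
    """Count how often each word follows each preceding word."""
    context_counts = Counter()  # times a word appears as the preceding word
    bigram_counts = Counter()   # times a (previous, current) pair appears
    for sentence in sentences:
        words = ["<s>"] + sentence.split()  # "<s>" marks the sentence start
        for prev, cur in zip(words, words[1:]):
            context_counts[prev] += 1
            bigram_counts[(prev, cur)] += 1
    return context_counts, bigram_counts

def sentence_probability(sentence, context_counts, bigram_counts):
    """Multiply the estimated probability of each word given the previous one."""
    words = ["<s>"] + sentence.split()
    prob = 1.0
    for prev, cur in zip(words, words[1:]):
        if context_counts[prev] == 0:
            return 0.0  # the preceding word was never seen in training
        prob *= bigram_counts[(prev, cur)] / context_counts[prev]
    return prob

context_counts, bigram_counts = train_bigram_model(corpus)
p_good = sentence_probability(
    "the old black cat was fast asleep", context_counts, bigram_counts
)
p_random = sentence_probability(
    "library a or the quantum some", context_counts, bigram_counts
)
print(p_good, p_random)
```

On this toy corpus the well-formed sentence gets a probability of about one in six, while the random sequence gets zero because none of its word pairs was ever observed. Practical n-gram models usually soften that hard zero with a technique called smoothing, which this sketch leaves out.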

Estimating n-gram probabilities becomes harder the longer the n-gram gets. It is therefore much more difficult to estimate accurate probabilities for 4-grams (sequences of four words) than for bigrams (sequences of two words). As a result, early language models of this type were often based on short n-grams.
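
One way to see why longer n-grams are harder is to check how many of the n-grams in a new sentence have already been seen in the training text. The sketch below does this for a tiny, made-up pair of texts; the helper names `ngrams` and `coverage` are hypothetical, and the numbers only illustrate the trend.

```python
def ngrams(words, n):
    """Return every run of n consecutive words as a tuple."""
    return [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]

def coverage(train_words, test_words, n):
    """Fraction of the test text's n-grams that also occur in the training text."""
    seen = set(ngrams(train_words, n))
    test = ngrams(test_words, n)
    return sum(gram in seen for gram in test) / len(test)

# Toy training and test texts (assumptions made for illustration).
train = "the old black cat was fast asleep . the old dog was fast asleep .".split()
test = "the old black dog was fast asleep".split()

bigram_coverage = coverage(train, test, 2)
fourgram_coverage = coverage(train, test, 4)
print(bigram_coverage, fourgram_coverage)
```

Here five of the six bigrams in the new sentence have been seen before, but only one of its four 4-grams has. With so few examples to count, the probabilities for longer n-grams are far less reliable.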

However, this meant that they often struggled to capture the connection between words that appeared far apart. As a result, the start and end of a sentence might not match up when the language model was used to generate text.


To avoid this problem, researchers created language models based on neural networks – AI systems loosely modelled on the way the human brain works. These language models are able to represent connections between words that may not be close to one another. Neural networks rely on large numbers of numerical values (called parameters) to capture these relationships between words. For the model to work well, these parameters have to be set correctly.

The neural network learns the appropriate values for these parameters by looking at large numbers of example documents, much as n-gram language models learn n-gram probabilities. During this “training” process, the neural network works through the training documents and learns to predict the next word based on the ones that came before.
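
The sketch below shows, in Python, how a training document might be turned into “predict the next word” examples. It covers only the data preparation step, not the neural network itself, and the function name and the `context_size` limit are assumptions made for illustration.

```python
def next_word_examples(text, context_size=3):
    """Pair each word with the (up to) context_size words that precede it."""
    words = text.split()
    examples = []
    for i in range(1, len(words)):
        context = words[max(0, i - context_size):i]  # the words that came before
        target = words[i]                            # the word to be predicted
        examples.append((context, target))
    return examples

examples = next_word_examples("the old black cat was fast asleep")
for context, target in examples:
    print(context, "->", target)
```

During training, the network would be shown each context, asked to predict the target, and have its parameters nudged whenever its guess was wrong.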

These models work well, but have some disadvantages. Although in theory the neural network is able to represent connections between words that occur far apart, in practice more importance is placed on words that are closer together.

More importantly, the words in the training documents have to be processed one after another to learn appropriate values for the network's parameters. This limits how quickly the network can be trained.

The dawn of transformers

A new type of neural network, called a transformer, was introduced in 2017 and avoided these problems by processing all the words in the input at the same time. This allowed transformers to be trained in parallel, meaning that the calculations required could be spread across multiple computers and carried out simultaneously.

A side effect of this change is that it allowed transformers to be trained on vastly more documents than was possible with previous approaches, producing larger language models.

Transformers also learn from examples of text, but can be trained to solve a wider range of problems than just predicting the next word. One is a kind of “fill in the blanks” problem, where some words in the training text have been removed. The goal is to guess which words are missing.
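
A gap-filling example of this kind could be built roughly as follows. This is a sketch under stated assumptions: the `[MASK]` placeholder and the 15% masking rate follow a convention used by some well-known transformer models, while the function name and the fixed random seed are invented here.

```python
import random

def make_gap_filling_example(sentence, mask_fraction=0.15,
                             mask_token="[MASK]", seed=0):
    """Hide a fraction of the words and record which words were hidden."""
    rng = random.Random(seed)  # fixed seed so the example is repeatable
    words = sentence.split()
    n_masked = max(1, round(len(words) * mask_fraction))  # hide at least one
    positions = sorted(rng.sample(range(len(words)), n_masked))
    masked = list(words)
    targets = {}
    for pos in positions:
        targets[pos] = words[pos]  # the answer the model should recover
        masked[pos] = mask_token
    return " ".join(masked), targets

masked_sentence, targets = make_gap_filling_example(
    "the old black cat was fast asleep"
)
print(masked_sentence)
print(targets)
```

The model sees only the masked sentence and is trained to produce the hidden words stored in `targets`.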

In another problem, the transformer is given a pair of sentences and asked to decide whether the second one should follow the first. Training on problems like these has made transformers more flexible and powerful than previous language models.
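
Training data for the sentence-pair problem can be sketched in the same spirit. The function below is a hypothetical illustration: for each sentence it produces one genuine pair (labelled `True`) and, where possible, one mismatched pair (labelled `False`). It assumes the sentences in the document are all different.

```python
import random

def make_sentence_pair_examples(sentences, seed=0):
    """Build (first, second, follows) triples from consecutive sentences."""
    rng = random.Random(seed)  # fixed seed so the example is repeatable
    examples = []
    for i in range(len(sentences) - 1):
        first, true_next = sentences[i], sentences[i + 1]
        examples.append((first, true_next, True))  # genuine continuation
        # Any sentence other than the first one and its real successor
        # can serve as a "does not follow" example.
        candidates = [s for j, s in enumerate(sentences) if j not in (i, i + 1)]
        if candidates:
            examples.append((first, rng.choice(candidates), False))
    return examples

document = [
    "The cat was fast asleep.",
    "It had been chasing mice all night.",
    "The dog watched from the doorway.",
]
pairs = make_sentence_pair_examples(document)
for first, second, follows in pairs:
    print(follows, "|", first, "|", second)
```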


The use of transformers has enabled the development of modern large language models. They are called large in part because they are trained on vastly more text than previous models.

Some of these AI models are trained on more than a trillion words. It would take an adult reading at an average speed more than 7,600 years to read that much. These models are also based on very large neural networks, some with more than 100 billion parameters.
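
The reading-time figure can be checked with some back-of-the-envelope arithmetic. The calculation below assumes a reading speed of 250 words per minute and non-stop reading, day and night; neither assumption is stated in the article, but together they give a result consistent with the figure quoted.

```python
WORDS_IN_TRAINING_DATA = 1_000_000_000_000  # one trillion words
WORDS_PER_MINUTE = 250                      # assumed average adult reading speed
MINUTES_PER_YEAR = 60 * 24 * 365            # reading around the clock

def years_to_read(word_count, words_per_minute=WORDS_PER_MINUTE):
    """Years needed to read word_count words without ever stopping."""
    return word_count / words_per_minute / MINUTES_PER_YEAR

years = years_to_read(WORDS_IN_TRAINING_DATA)
print(f"{years:,.0f} years")
```

Under these assumptions the answer comes out at roughly 7,610 years. A slower reader, or one who stops to sleep, would need considerably longer.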

In the past few years, an extra component has been added to large language models that allows users to interact with them using prompts. These prompts can be questions or instructions.

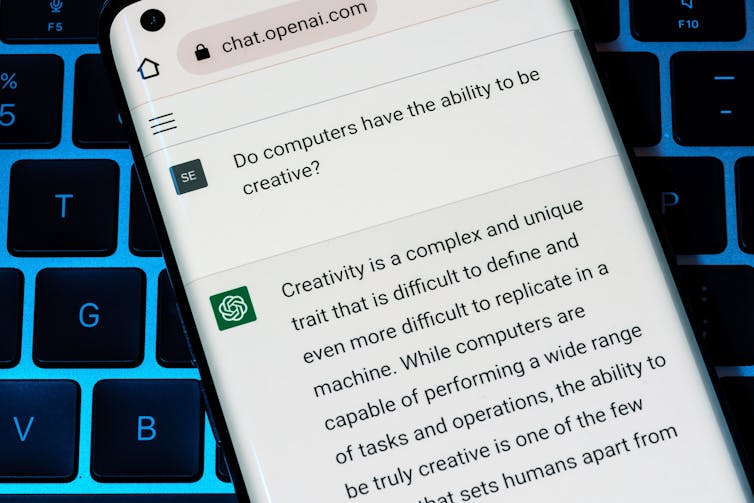

This has enabled the development of generative AI systems such as ChatGPT, Google's Gemini and Meta's Llama. Models learn to respond to the prompts using a process called reinforcement learning, which is similar to the way computers are taught to play games like chess.

Humans provide the language model with prompts, and their feedback on the replies it produces is used by the model's learning algorithm to guide further output. Generating all these questions and rating the replies requires a lot of human input, which can be expensive to obtain.

One way to reduce this effort is to use a language model to create examples that simulate human-AI interaction. This AI-generated feedback is then used to train the system.

However, creating a large language model is still an expensive undertaking. Estimates put the cost of training some recent models in the hundreds of millions of dollars. There are also environmental costs: the carbon dioxide emissions associated with creating LLMs are estimated to be equivalent to those of multiple transatlantic flights.

These are problems we will need to solve in the midst of an AI revolution that, for now, shows no sign of slowing down.