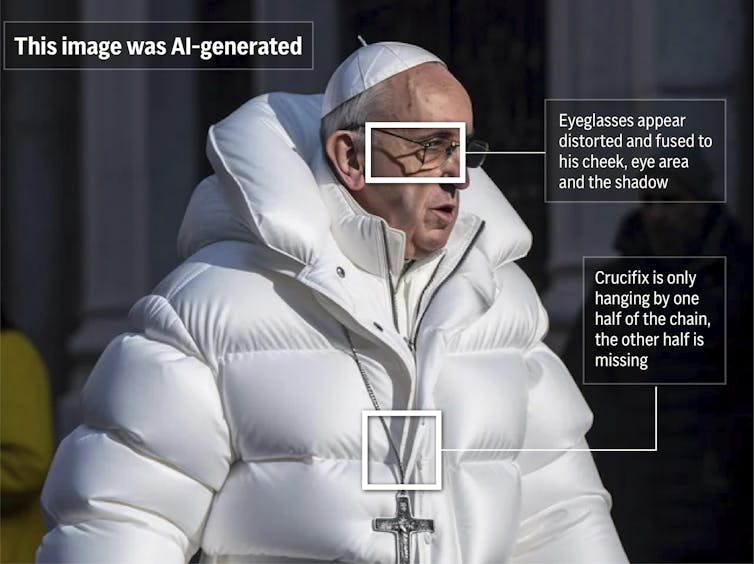

In the age of generative artificial intelligence (GenAI), the phrase “I’ll believe it when I see it” no longer holds. GenAI can not only create manipulated representations of real people, it can also be used to create entirely fictitious people and scenarios.

GenAI tools are affordable and accessible to everyone, and AI-generated images are becoming ubiquitous. If you’ve been scrolling through your news or Instagram feeds, chances are you’ve scrolled past an AI-generated image without realizing it.

As a computer science researcher and doctoral student at the University of Waterloo, I’m increasingly concerned about my own inability to distinguish what’s real from what’s AI-generated.

My research team conducted a survey in which nearly 300 participants were asked to classify a set of images as real or fake. The participants’ average classification accuracy in 2022 was 61 percent. Participants classified real images more accurately than fake ones. Accuracy is likely much lower today because of rapidly improving GenAI technology.

We also analyzed their responses using text mining and keyword extraction to learn the common justifications participants gave for their classifications. It was immediately apparent that, in a generated image, a person’s eyes were considered the telltale indicator that the image was probably AI-generated. AI also struggled to produce realistic teeth, ears and hair.

But these tools are constantly improving. The telltale signs we could once use to detect AI-generated images are no longer reliable.

Improving images

Researchers began exploring the use of generative adversarial networks (GANs) for image and video synthesis in 2014. The seminal paper “Generative Adversarial Nets” introduced the adversarial process behind GANs. Although the paper does not mention deepfakes, it was the springboard for GAN-based deepfakes.

Some early examples of GenAI art that used GANs include the “DeepDream” images created by Google engineer Alexander Mordvintsev in 2015.

But the term “deepfake” was officially born in 2017, after a Reddit user whose username was “deepfakes” used GANs to generate synthetic celebrity pornography.


In 2019, software engineer Philip Wang created the “ThisPersonDoesNotExist” website, which used GANs to generate realistic-looking images of people. That same year, the release of the Deepfake Detection Challenge, which sought new and improved deepfake detection models, garnered widespread attention and led to the rise of deepfakes.

About a decade later, one of the authors of the “Generative Adversarial Nets” paper, Canadian computer scientist Yoshua Bengio, began voicing his concerns about the need to regulate AI.

Bengio and other AI trailblazers signed an open letter in 2024 calling for better deepfake regulation. He also led the first International AI Safety Report, which was published in early 2025.

Hao Li, a deepfake pioneer and one of the world’s leading deepfake artists, said, in a manner eerily reminiscent of Robert Oppenheimer’s famous “Now I am become Death” quote:

“This is developing more rapidly than I thought. Soon it’s going to get to the point where there is no way we can actually detect ‘deepfakes’ anymore, so we have to look at other types of solutions.”

The new disinformation

Large technology companies have indeed encouraged the development of algorithms that can detect deepfakes. These algorithms commonly look for the following signs to determine whether content is a deepfake:

- Number of words spoken per sentence, or the speech rate (the average human speech rate is 120-150 words per minute),

- Facial expressions, based on known coordinates of the human eyes, eyebrows, nose, lips, teeth and facial contours,

- Reflections in the eyes, which tend to be unconvincing (either missing or oversimplified),

- Image saturation, with AI-generated images being less saturated and containing fewer underexposed pixels than pictures taken by an HDR camera.
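As a rough illustration of how two of these signals might be measured, here is a minimal Python sketch. It is not the method used by any particular detector: the function names, the pixel format (a list of RGB tuples) and the 0.1 brightness cutoff for "underexposed" are all assumptions made for this example. Only the 120-150 words-per-minute range comes from the list above.

```python
import colorsys

# Typical human speech rate quoted above, in words per minute.
HUMAN_WPM_RANGE = (120.0, 150.0)


def speech_rate_wpm(transcript: str, duration_seconds: float) -> float:
    """Words per minute for a transcript spoken over duration_seconds."""
    if duration_seconds <= 0:
        raise ValueError("duration_seconds must be positive")
    word_count = len(transcript.split())
    return word_count / (duration_seconds / 60.0)


def is_typical_speech_rate(wpm: float, wpm_range=HUMAN_WPM_RANGE) -> bool:
    """True if the rate falls inside the quoted human range."""
    low, high = wpm_range
    return low <= wpm <= high


def mean_saturation(pixels) -> float:
    """Average HSV saturation (0.0 to 1.0) over (r, g, b) pixels in 0-255."""
    total = 0.0
    count = 0
    for r, g, b in pixels:
        _, saturation, _ = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
        total += saturation
        count += 1
    if count == 0:
        raise ValueError("pixels must not be empty")
    return total / count


def underexposed_fraction(pixels, threshold: float = 0.1) -> float:
    """Share of pixels whose HSV brightness is below threshold.

    The 0.1 cutoff is an illustrative assumption, not a published value.
    """
    dark = 0
    count = 0
    for r, g, b in pixels:
        _, _, value = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
        if value < threshold:
            dark += 1
        count += 1
    if count == 0:
        raise ValueError("pixels must not be empty")
    return dark / count
```

A real detector would combine many such features in a trained model rather than apply fixed cutoffs. On their own, an unusual speech rate or a low saturation score is only a weak hint that content may be synthetic.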


But even these traditional deepfake detection algorithms have several shortcomings. They are usually trained on high-resolution images, so they may fail on low-resolution surveillance footage, or when the subject is poorly lit or posed in a way the algorithm does not recognize.

Despite flimsy and inadequate attempts at regulation, rogue players continue to use deepfakes and text-to-image AI synthesis for nefarious purposes. The consequences of this unregulated use range from political destabilization at the national and global level to the destruction of reputations through very personal attacks.

Disinformation is not new, but its modes of distribution are constantly changing. Deepfakes can be used not only to spread disinformation, that is, to assert that something false is true, but also to create plausible deniability and assert that something true is false.

It is safe to say that in today’s world, seeing will never be believing again. What might once have been irrefutable evidence could very well be an AI-generated image.