Artificial intelligence is now part of everyday life. It’s in our phones, schools and homes. For young people, AI shapes how they learn, connect and express themselves. But it also raises real concerns about privacy, fairness and control.

AI systems often promise personalization and convenience. But behind the scenes, they collect vast amounts of personal data, make predictions and influence behaviour, without clear rules or consent.

This is particularly troubling for youth, who are sometimes left out of conversations about how AI systems are built and governed.

The author’s guide on how to protect youth privacy in an AI world.

Concerns about privacy

My research team conducted national research and heard from youth aged 16 to 19 who use AI every day – on social media, in classrooms and in online games.

They told us they want the benefits of AI, but not at the cost of their privacy. While they value tailored content and smart recommendations, they feel uneasy about what happens to their data.

Many expressed concern about who owns their information, how it is used and whether they can ever take it back. They are frustrated by long privacy policies, hidden settings and the sense that you need to be a tech expert just to protect yourself.

As one participant said:

“I’m mainly concerned about what data is being taken and the way it’s used. We often aren’t informed clearly.”

Uncomfortable sharing their data

Young people were the most uncomfortable group when it came to sharing personal data with AI. Even when they got something in return, like convenience or customization, they didn’t trust what would happen next. Many worried about being watched, tracked or categorized in ways they can’t see.

This goes beyond technical risks. It’s about how it feels to be constantly analyzed and predicted by systems you can’t question or understand.

AI doesn’t just collect data. It draws conclusions, shapes online experiences and influences decisions. That can feel like manipulation.

Parents and teachers are concerned

Adults (educators and parents) in our study shared similar concerns. They want better safeguards and stronger rules.

But many admitted they struggle to keep up with how fast AI is moving. They often don’t feel confident helping youth make smart decisions about data and privacy.

Some saw this as a gap in digital education. Others pointed to the need for plain-language explanations and more transparency from the tech companies that build and deploy AI systems.

Parents and educators struggle with the pace of AI developments.

(Getty Images/Unsplash)

Professionals focus on tools, not people

The study found AI professionals approach these challenges differently. They think about privacy in technical terms such as encryption, data minimization and compliance.

While these are important, they don’t always align with what youth and educators care about: trust, control and the right to know what’s happening.

Companies often see privacy as a trade-off for innovation. They value efficiency and performance and tend to trust technical solutions over user input. That can leave out key concerns from the people most affected, especially young users.

Power and control lie elsewhere

AI professionals, parents and educators influence how AI is used. But the biggest decisions happen elsewhere. Powerful tech companies design most digital platforms and decide what data is collected, how systems work and what choices users see.

Even when professionals push for safer practices, they work within systems they didn’t build. Weak privacy laws and limited enforcement mean that control over data and design stays with a few companies.

This makes it even harder to achieve transparency and hold platforms accountable.

Tech companies hold all of the control when it comes to AI.

(AP Photo)

What’s missing? A shared understanding

Right now, youth, parents, educators and tech companies aren’t on the same page. Young people want control, parents want protection and professionals want scalability.

These goals often clash, and without a shared vision, privacy rules are inconsistent, hard to implement or simply ignored.

Our research shows that ethical AI governance can’t be solved by one group alone. We need to bring youth, families, educators and experts together to shape the future of AI.

The PEA-AI model

To guide this process, we developed a framework called PEA-AI: Privacy–Ethics Alignment in Artificial Intelligence. It helps identify where values collide and how to move forward. The model highlights four key tensions:

1. Control versus trust: Youth want autonomy. Developers want reliability. We need systems that support both.

2. Transparency versus perception: What counts as “clear” to experts often feels confusing to users.

3. Parental oversight versus youth voice: Policies must balance protection with respect for youth agency.

4. Education versus awareness gaps: We can’t expect youth to make informed decisions without better tools and support.

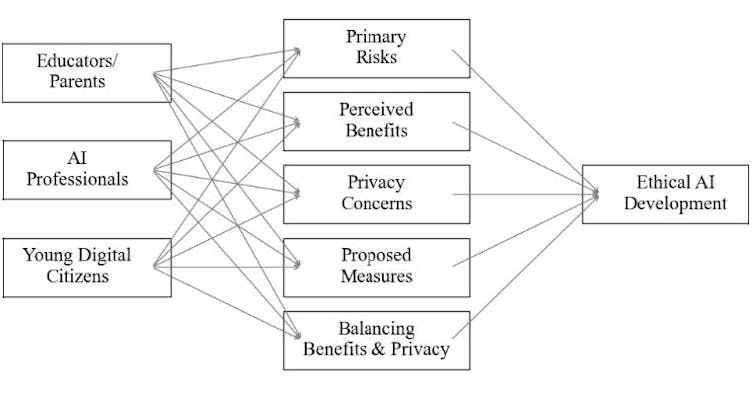

A conceptual diagram shows how key stakeholders such as youth, parents and AI professionals shape five key constructs that influence ethical AI development.

(Ajay Shrestha/OPC Project)

What can be done?

Our research points to six practical steps:

1. Use short, visual, plain-language forms. Let youth update settings frequently.

2. Minimize data collection. Make dashboards that show users what’s being stored.

3. Provide clear, non-technical explanations of how AI works, especially when used in schools.

4. Run audits, allow feedback and create ways for users to report harm.

5. Bring AI literacy into classrooms. Train teachers and involve parents.

6. Include youth in tech policy decisions. Build systems with them, not only for them.

AI can be a powerful tool for learning and connection, but it must be built with care. Right now, our research suggests young people don’t feel in control of how AI sees them, uses their data or shapes their world.

Ethical AI starts with listening. If we want digital systems to be fair, safe and trusted, we must give youth a seat at the table and treat their voices as essential, not optional.