Last week, the tragic news broke that US teenager Sewell Seltzer III took his own life after forming a deep emotional attachment to an artificial intelligence (AI) chatbot on the Character.AI website.

As his relationship with the companion AI became increasingly intense, the 14-year-old began withdrawing from family and friends and getting into trouble at school.

In a lawsuit filed against Character.AI by the boy's mother, chat transcripts show intimate and often highly sexual conversations between Sewell and the chatbot Dany, modelled on the Game of Thrones character Daenerys Targaryen. They discussed crime and suicide, and the chatbot used phrases such as “That’s not a reason not to go through with it.”

Megan Garcia vs. Character AI lawsuit.

This is not the first known case of a vulnerable person dying by suicide after interacting with a chatbot persona. A Belgian man took his life last year in a similar episode involving Character.AI's main competitor, Chai AI. When this happened, the company told the media it was doing its best to minimise harm.

In a statement to CNN, Character.AI said it takes “the safety of our users very seriously” and has introduced “numerous new safety measures over the past six months.”

In a separate statement on the company's website, Character.AI outlines additional safety measures for users under the age of 18. (In its current terms of service, the age limit is 16 for European Union residents and 13 elsewhere in the world.)

However, these tragedies starkly illustrate the dangers of rapidly developing and widely available AI systems that anyone can converse and interact with. We urgently need regulation to protect people from potentially dangerous, irresponsibly designed AI systems.

How can we regulate AI?

The Australian government is in the process of developing mandatory guardrails for high-risk AI systems. “Guardrails” is a fashionable term in the world of AI governance and refers to processes in the design, development and deployment of AI systems. These include measures such as data governance, risk management, testing, documentation and human oversight.

One of the decisions the Australian government must make is how to define which systems are “high-risk”, and therefore covered by the guardrails.

The government is also considering whether guardrails should apply to all “general-purpose models”. General-purpose models are the engine under the hood of AI chatbots like Dany: AI algorithms that can generate text, images, videos and music from user prompts, and that can be adapted for use in a variety of contexts.

In the European Union's groundbreaking AI Act, high-risk systems are defined using a list, which regulators are empowered to update regularly.

An alternative is a principles-based approach, where a high-risk designation is made on a case-by-case basis. It would depend on multiple factors, such as the risk of adverse impacts on rights, risks to physical or mental health, the risk of legal impacts, and the severity and extent of those risks.

Chatbots should be high-risk AI

In Europe, companion AI systems such as Character.AI and Chai are not designated as high-risk. Essentially, their providers only need to let users know they are interacting with an AI system.

It has become clear, though, that companion chatbots are not low risk. Many users of these applications are children and teenagers. Some of the systems have even been marketed to people who are lonely or living with mental illness.

Chatbots are capable of generating unpredictable, inappropriate and manipulative content. They mimic toxic relationships all too easily. Transparency, in the form of labelling the output as AI-generated, is not enough to manage these risks.

Even when we are aware that we are talking to chatbots, human beings are psychologically primed to attribute human traits to something we converse with.

The suicide deaths reported in the media may be just the tip of the iceberg. We have no way of knowing how many vulnerable people are in addictive, toxic or even dangerous relationships with chatbots.

Guardrails and an “off switch”

When Australia finally introduces mandatory guardrails for high-risk AI systems, which may happen as early as next year, the guardrails should apply to both companion chatbots and the general-purpose models the chatbots are built on.

Guardrails such as risk management, testing and monitoring will be most effective if they get to the human heart of AI hazards. Risks from chatbots are not just technical risks with technical solutions.

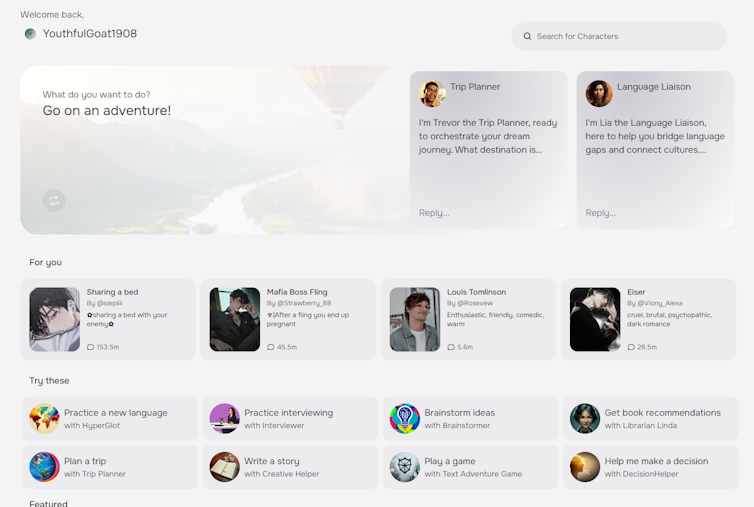

Apart from the words a chatbot might use, the context of the product also matters. In the case of Character.AI, the marketing promises to “empower” people, the interface mimics an ordinary text message exchange with a person, and the platform allows users to select from a range of pre-made characters, including some problematic personas.

C.AI

Truly effective AI guardrails should mandate more than just responsible processes such as risk management and testing. They must also require thoughtful, humane design of interfaces, interactions and relationships between AI systems and their human users.

Even then, guardrails may not be enough. Just like companion chatbots, systems that at first appear to be low risk may cause unanticipated harms.

Regulators should have the power to remove AI systems from the market if they cause harm or pose unacceptable risks. In other words, we don’t just need guardrails for high-risk AI. We also need an off switch.