Adobe says it's building an AI model to generate video. But it isn't revealing exactly when the model will launch, or much about it at all, beyond the fact that it exists.

Adobe's model – part of the company's growing Firefly family of generative AI products – is offered as an answer of sorts to OpenAI's Sora, Google's Imagen 2 and models from the growing number of startups in the emerging generative AI video space. It will arrive in Premiere Pro, Adobe's flagship video editing program, sometime later this year, Adobe says.

Like many of today's generative AI video tools, Adobe's model creates footage from scratch (from either a prompt or reference images) – and it powers three new features in Premiere Pro: object addition, object removal and generative extend.

They're pretty self-explanatory.

Object addition lets users select a segment of a video clip (the upper third, say, or the bottom-left corner) and enter a prompt to insert objects into that segment. In a briefing with TechCrunch, an Adobe spokesperson showed a still of a real briefcase filled with diamonds generated by Adobe's model.

AI-generated diamonds, courtesy of Adobe. Photo credit: Adobe

Object removal removes objects from clips, such as boom mics or coffee cups in the background of a shot.

Removing objects with AI. Note that the results aren't quite perfect. Photo credit: Adobe

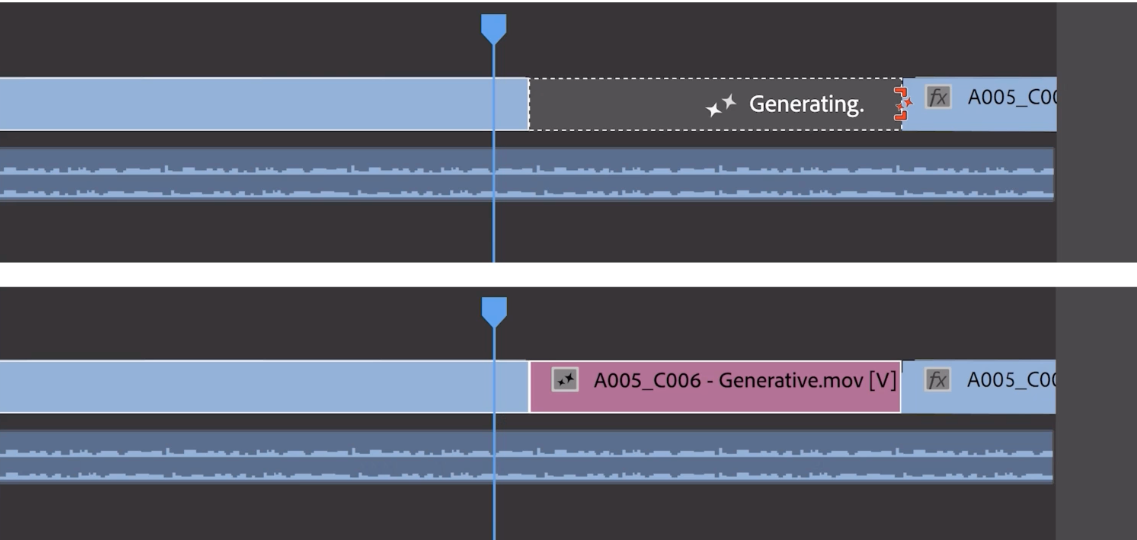

With generative extend, frames are added to the beginning or end of a clip (unfortunately, Adobe wouldn't say how many). Generative extend isn't meant to create whole scenes, but rather to add buffer frames to sync up with a soundtrack or hold on a shot for an extra beat – for instance, to add emotional weight.

Photo credit: Adobe

To address the fears of deepfakes that inevitably arise around generative AI tools like these, Adobe says it's bringing Content Credentials – metadata for identifying AI-generated media – to Premiere. Content Credentials, a media provenance standard that Adobe backs through its Content Authenticity Initiative, were already in Photoshop and are a component of Adobe's image-generating Firefly models. In Premiere, they'll indicate not only which content was AI-generated but also which AI model was used to generate it.

I asked Adobe what data – images, videos and so on – was used to train the model. The company wouldn't say how (or whether) it compensates contributors to the dataset.

Last week, Bloomberg, citing sources familiar with the matter, reported that Adobe is paying photographers and artists on its stock media platform, Adobe Stock, up to $120 to submit short video clips to train its video generation model. Depending on the submission, pay is said to range from around $2.62 per minute of video to around $7.25 per minute, with higher-quality footage commanding correspondingly higher rates.

That would be a departure from Adobe's current arrangement with the Adobe Stock artists and photographers whose work it uses to train its image generation models. The company pays those contributors an annual bonus, rather than a one-time fee, depending on the volume of content they have in Stock and how it's being used – although the bonus is governed by an opaque formula and isn't guaranteed from year to year.

If Bloomberg's reporting is accurate, it represents an approach in stark contrast to that of generative AI video rivals like OpenAI, which is said to have used publicly available web data – including videos from YouTube – to train its models. YouTube CEO Neal Mohan recently said that using YouTube videos to train OpenAI's text-to-video generator would be a violation of the platform's terms of service, highlighting the legal hurdles facing the fair use arguments of OpenAI and others.

Companies including OpenAI are being sued over allegations that they violate intellectual property law by training their AI on copyrighted content without crediting or paying the owners. Adobe seems intent on avoiding this end, as do its sometime generative AI competitors Shutterstock and Getty Images (which also have deals in place to license model training data), and on positioning itself – with its IP indemnity policy – as a demonstrably “safe” option for corporate customers.

As for payment, Adobe isn't saying how much it will cost customers to use the upcoming video generation features in Premiere; pricing is probably still being worked out. However, the company says the payment scheme will follow the generative credits system established with its early Firefly models.

For customers with a paid subscription to Adobe Creative Cloud, generative credits renew every month, with allotments ranging from 25 to 1,000 per month depending on the plan. More complex workloads (e.g. higher-resolution generated images or multiple image generations) generally require more credits.

The big question for me is: Will Adobe's AI-powered video features ultimately be worth what they end up costing?

The previous Firefly image generation models were widely mocked as disappointing and flawed compared with Midjourney, OpenAI's DALL-E 3 and other competing tools. The lack of a release time frame for the video model doesn't exactly inspire confidence that it will avoid the same fate. Nor does the fact that Adobe declined to show me live demos of object addition, object removal and generative extend, insisting instead on a prerecorded sizzle reel.

Perhaps to hedge its bets, Adobe says it's in talks with third parties about integrating their video generation models into Premiere as well, to power tools like generative extend and more.

One of those providers is OpenAI.

Adobe says it's working with OpenAI to integrate Sora into the Premiere workflow. (An OpenAI tie-up makes sense given the AI startup's recent overtures to Hollywood; tellingly, OpenAI CTO Mira Murati will be attending the Cannes Film Festival this year.) Other early partners include Pika, a startup developing AI tools for generating and editing videos, and Runway, one of the first companies to release a generative video model.

An Adobe spokesperson said the company is open to working with others in the future.

To be clear, these integrations are currently more of a thought experiment than a working product. Adobe stressed to me repeatedly that they're in “early preview” and “research” rather than something customers can expect to play with anytime soon.

And that, I'd say, captures the overall tone of Adobe's generative video presser.

With these announcements, Adobe is clearly trying to signal that it's serious about generative video, if only in a preliminary sense. It would be foolish not to – getting caught flat-footed in the generative AI race risks losing out on a valuable potential new revenue stream, assuming the economics eventually work out in Adobe's favor. (AI models are expensive to train, run and operate, after all.)

But what it's showing – concepts – isn't particularly compelling, to be honest. With Sora on the rise and surely more innovations in the pipeline, the company still has a lot to prove.