Google’s latest flagship I/O conference saw the company double down on its Search Generative Experience (SGE), which will embed generative AI into Google Search.

SGE, which aims to bring AI-generated answers to over a billion users by the end of 2024, relies on Gemini, Google’s family of large language models (LLMs), to generate human-like responses to search queries.

Instead of a standard Google search, which primarily displays links, you’ll be presented with an AI-generated summary alongside other results.

SGE embeds AI-generated text into search results.

This “AI Overview” has been criticized for providing nonsensical information, and Google is working quickly on fixes before it begins mass rollout.

But aside from recommending adding glue to pizza and saying pythons are mammals, there’s another bugbear with Google’s latest AI-driven search strategy: its environmental footprint.

While traditional search engines simply retrieve existing information from the web, generative AI systems like SGE must create entirely new content for every query. This process requires vastly more computational power and energy than conventional search methods.

It’s estimated that between 3 and 10 billion Google searches are conducted daily. The impact of applying AI to even a small percentage of those searches could be enormous.

Sasha Luccioni, a researcher at the AI company Hugging Face who studies the environmental impact of these technologies, recently discussed the sharp increase in energy consumption SGE might trigger.

Luccioni and her team estimate that generating search information with AI could require 30 times as much energy as a traditional search.

“It just makes sense, right? While a mundane search query finds existing data from the Internet, applications like AI Overviews must create entirely new information,” she told Scientific American.
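To see what a 30-fold per-query difference could mean in aggregate, here is a minimal Python sketch. It assumes, purely for illustration, that every AI-assisted search costs exactly 30 times the energy of a traditional one and that all other searches are unchanged; the function name and the example shares are hypothetical, not figures from Luccioni's team.

```python
def fleet_energy_multiplier(ai_share: float, ai_cost_ratio: float = 30.0) -> float:
    """Total search energy relative to an all-traditional baseline of 1.0.

    ai_share      -- fraction of searches answered with generative AI (0 to 1)
    ai_cost_ratio -- energy of one AI search relative to one traditional search
                     (30 is the estimate quoted in the article)
    """
    if not 0.0 <= ai_share <= 1.0:
        raise ValueError("ai_share must be between 0 and 1")
    # Traditional searches keep a relative cost of 1; AI searches cost ai_cost_ratio.
    return (1.0 - ai_share) + ai_share * ai_cost_ratio


# Illustrative shares only; the article does not say what share SGE will reach.
for share in (0.01, 0.10, 0.50):
    multiplier = fleet_energy_multiplier(share)
    print(f"{share:.0%} of searches using AI -> {multiplier:.2f}x baseline energy")
```

Under these assumptions, routing just a tenth of searches through generative AI would nearly quadruple the total energy used for search.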

In 2023, Luccioni and her colleagues found that training the LLM BLOOM emitted greenhouse gases equivalent to 19 kilograms of CO2 per day of use, or the amount generated by driving 49 miles in an average gas-powered car. They also found that generating just two images using AI can consume as much energy as fully charging an average smartphone.

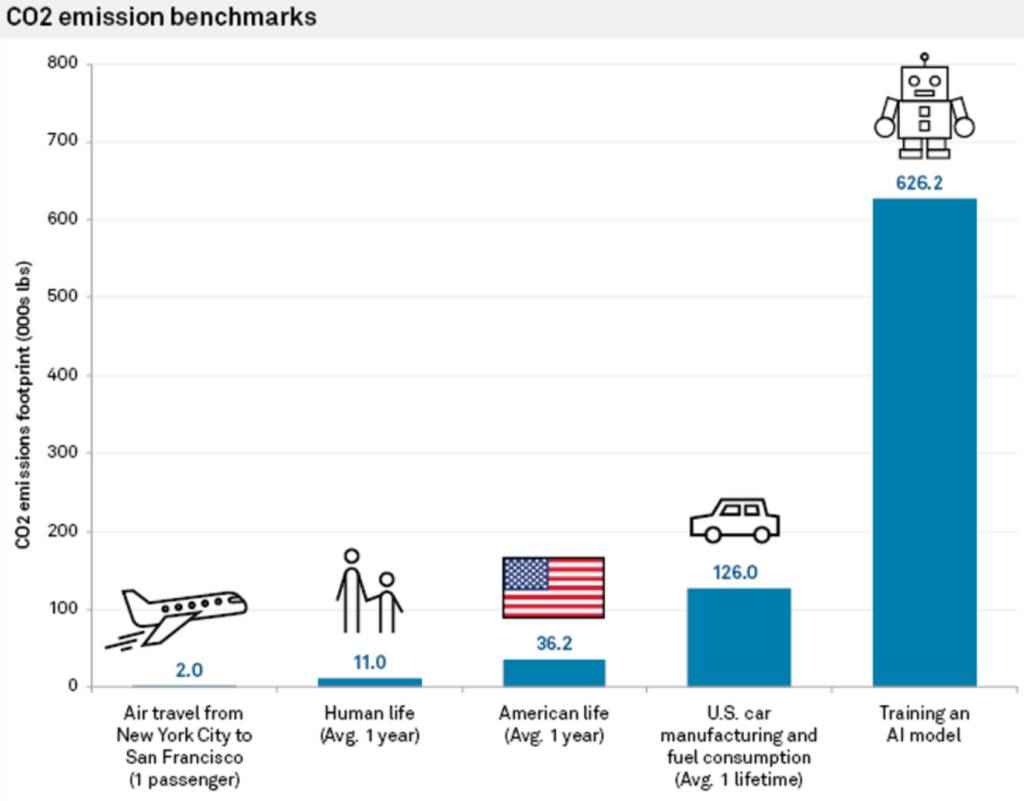

Previous studies have also assessed the CO2 emissions associated with AI model training, which could exceed the emissions of hundreds of commercial flights or the average car across its lifetime.

CO2 impact of training AI models. Source: MIT Technology Review.

In an interview with Reuters last year, John Hennessy, chair of Google’s parent company, Alphabet, himself acknowledged the increased costs associated with AI-powered search.

“An exchange with a large language model could cost ten times more than a traditional search,” he stated, although he predicted costs would decrease as the models are fine-tuned.

AI search’s strain on infrastructure and resources

Data centers housing AI servers are projected to double their energy consumption by 2026, potentially using as much power as a small country.

With chip manufacturers like NVIDIA rolling out bigger, more powerful chips, it could soon take the equivalent of multiple nuclear power stations to run large-scale AI workloads.

When AI companies respond to questions about how this can be sustained, they typically cite renewables’ increased efficiency and capacity and the improved power efficiency of AI hardware.

However, the transition to renewable energy sources for data centers is proving to be slow and complicated.

As Shaolei Ren, a computer engineer at the University of California, Riverside, who studies sustainable AI, explained, “There’s a supply and demand mismatch for renewable energy. The intermittent nature of renewable energy production often fails to match the constant, stable power required by data centers.”

Because of this mismatch, fossil fuel plants are being kept online longer than planned in areas with high concentrations of tech infrastructure.

Another solution to energy woes lies in energy-efficient AI hardware. NVIDIA’s latest Blackwell chip is substantially more energy-efficient than its predecessors, and other companies like Delta are working on efficient data center hardware.

Rama Ramakrishnan, an MIT Sloan School of Management professor, explained that while the number of searches going through LLMs is likely to increase, the cost per query seems to decrease as companies work to make hardware and software more efficient.

But will that be enough to offset increasing energy demands? “It’s difficult to predict,” Ramakrishnan says. “My guess is that it’s probably going to go up, but it’s probably not going to go up dramatically.”

As the AI race heats up, mitigating environmental impacts has become essential. Necessity is the mother of invention, and tech companies are under pressure to create solutions to keep AI’s momentum rolling.

Energy-efficient hardware, renewables, and even fusion power can pave the way to a more sustainable future for AI, but the journey is fraught with uncertainty.

SGE could strain water supplies, too

We can also speculate about the water demands created by SGE, which could mirror increases in data center water consumption attributed to the generative AI industry.

According to recent Microsoft environmental reports, water consumption has rocketed by as much as 50% in some regions, with water consumption at the Las Vegas data center doubling since 2022. Google’s reports also registered a 20% increase in data center water use in 2023 compared with 2022.

Ren attributes the majority of this growth to AI, stating, “It’s fair to say the majority of the growth is due to AI, including Microsoft’s heavy investment in generative AI and partnership with OpenAI.”

Ren estimated that each interaction with ChatGPT, consisting of 5 to 50 prompts, consumes a staggering 500 ml of water.

In a paper published in 2023, Ren’s team wrote, “The global AI demand may be accountable for 4.2 – 6.6 billion cubic meters of water withdrawal in 2027, which is more than the total annual water withdrawal of 4 – 6 Denmark or half of the United Kingdom.”

Using Ren’s research, we can make some napkin calculations for how Google’s SGE might factor into these predictions.

Let’s say Google processes an average of 8.5 billion daily searches worldwide. Assuming that even a fraction of those searches, say 10%, use SGE and generate AI-powered responses with an average of 50 words per response, the water consumption could be phenomenal.

Ren’s estimate of 500 milliliters of water per 5 to 50 prompts works out to between 10 and 100 milliliters per prompt. Taking the upper end of that range, we can roughly calculate that 850 million SGE-powered searches (10% of Google’s daily searches) would consume roughly 85 billion milliliters, or 85 million liters, of water daily. At the lower end, the figure would be closer to 8.5 million liters.

At the upper end, this is comparable to the daily water consumption of a city with a population of over 500,000 people.
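The napkin calculation above can be reproduced in a few lines of Python. This is only a sketch under the article's own assumptions: 8.5 billion daily searches, a 10% SGE share, and one SGE search treated as equivalent to one ChatGPT prompt in Ren's estimate. The constant and function names are illustrative. Both ends of the 5-to-50-prompt range are shown; the 85-million-liter figure corresponds to 5 prompts per 500 ml.

```python
DAILY_SEARCHES = 8.5e9        # assumed average daily Google searches
SGE_SHARE = 0.10              # assumed fraction of searches that use SGE
WATER_ML_PER_SESSION = 500.0  # Ren's estimate for one ChatGPT interaction
CITY_POPULATION = 500_000     # city size used in the article's comparison


def daily_water_liters(prompts_per_session: int) -> float:
    """Daily water use in liters, treating one SGE search as one prompt."""
    sge_searches = DAILY_SEARCHES * SGE_SHARE
    ml_per_search = WATER_ML_PER_SESSION / prompts_per_session
    return sge_searches * ml_per_search / 1000.0  # milliliters -> liters


high = daily_water_liters(5)   # 100 ml per search (upper bound)
low = daily_water_liters(50)   # 10 ml per search (lower bound)

print(f"Upper bound: {high / 1e6:.1f} million liters per day")
print(f"Lower bound: {low / 1e6:.1f} million liters per day")
print(f"Upper bound per resident of a {CITY_POPULATION:,}-person city: "
      f"{high / CITY_POPULATION:.0f} liters per day")
```

The last line divides the upper-bound total by the city population, which gives the per-person daily figure implied by the comparison.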

In reality, actual water consumption may vary depending on factors such as the efficiency of Google’s data centers and the precise implementation and scale of SGE.

Nevertheless, it’s very reasonable to speculate that SGE and other forms of AI search will further ramp up AI’s resource usage.

How the industry reacts will determine whether global AI experiences like SGE can be sustainable at a large scale.