Victorian MP Georgie Purcell recently spoke out against a digitally edited image in the news media that had altered her body and partially removed some of her clothing.

Whether or not the editing was assisted by artificial intelligence (AI), her experience demonstrates the potential sexist, discriminatory and gender-based harms that may occur when these technologies are used unchecked.

Purcell’s experience also reflects a disturbing trend in which images, particularly of women and girls, are being sexualised, “deepfaked” and “nudified” without the person’s knowledge or consent.

What’s AI got to do with it?

The term AI can cover a wide range of computer software and smartphone apps that use some level of automated processing.

While science fiction might lead us to think otherwise, much of the everyday use of AI-assisted tools is comparatively simple. We teach a computer program or smartphone application what we want it to do, it learns from the data we feed it, and it applies this learning to perform the task in various ways.

A problem with AI image editing is that these tools rely on the information our human society has generated. It is no accident that instructing a tool to edit a photograph of a woman might result in it making the subject look younger, slimmer and/or curvier, and even less clothed. A simple internet search for “women” will quickly reveal that these are the qualities our society frequently endorses.

While digitally retouching real photos has been happening for years, fake images of women are on the rise.


Similar problems have emerged in AI facial recognition tools that have misidentified suspects in criminal investigations because of the racial and gender bias built into the software. The ghosts of sexism and racism, it seems, are now in the machines.

Technology reflects back to us the disrespect, inequality, discrimination and – in the treatment of Purcell – overt sexism that we ourselves have already circulated.

Sexualised deepfakes

While anyone can be a victim of AI-facilitated image-based abuse, or sexualised deepfakes, it is no secret that there are gender inequalities in pornographic imagery found online.

Sensity AI (formerly Deeptrace Labs) has reported on online deepfake videos since December 2018 and consistently found that 90–95% of them are non-consensual pornography. About 90% of them are of women.

Young women, children and teens across the globe are also being subjected to the non-consensual creation and sharing of sexualised and nudified deepfake imagery. Recent reports of faked sexualised images of teenage girls have emerged from a New Jersey high school in the United States and another high school in Almendralejo, Spain. A third instance was reported in a London high school, which contributed to a 14-year-old girl taking her own life.

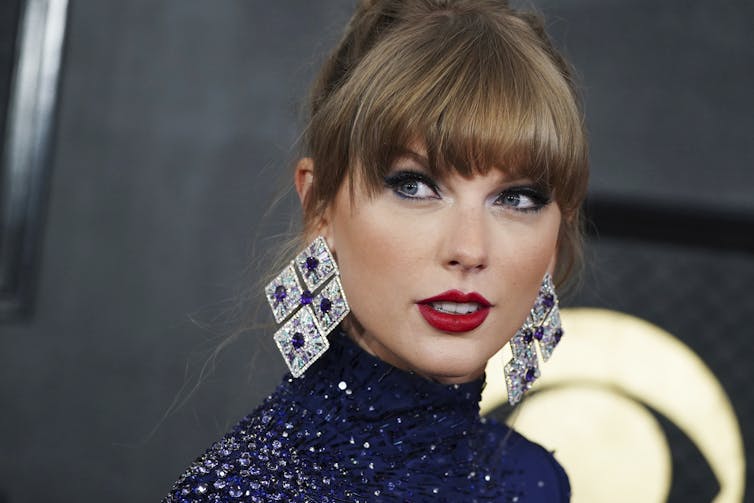

Women celebrities are also a popular target of sexualised deepfake imagery. Just last month, sexualised deepfakes of Taylor Swift were openly shared across a range of digital platforms and websites without consent.

While research data on broader victimisation and perpetration rates of this kind of image editing and distribution is sparse, our 2019 survey across the United Kingdom, Australia and New Zealand found 14.1% of respondents aged between 16 and 84 had experienced someone creating, distributing or threatening to distribute a digitally altered image representing them in a sexualised way.

Our research also shed light on the harms of this form of abuse. Victims reported experiencing psychological, social, physical, economic, and existential trauma, similar to harms identified by victims of other forms of sexual violence and image-based abuse.

This year, we’ve begun a new study to further explore the issue. We’ll look at current victimisation and perpetration rates, and the consequences and harms of the non-consensual creation and sharing of sexualised deepfakes across the US, UK and Australia. We want to find out how we can improve responses, interventions and prevention.

How can we end AI-facilitated abuse?

The abuse of Swift in such a public forum has reignited calls for federal laws and platform regulations, moderation and community standards to prevent and block sexualised deepfakes from being shared.

Stunningly, while the non-consensual sharing of sexualised deepfakes is already a crime in most Australian states and territories, the laws relating to their creation are less consistent. And in the US, there is no national law criminalising sexualised deepfakes. Fewer than half of US states have one, and state laws are highly variable in how much they protect and support victims.

Sexualised deepfake images of Taylor Swift were circulated widely online.


But focusing on individual states or countries is not sufficient to tackle this global problem. Sexualised deepfakes and AI-generated content are perpetrated internationally, highlighting the need for collective global action.

There is some hope we can learn to better detect AI-generated content through guidance in spotting fakes. But the reality is that technologies are constantly improving, so our ability to distinguish the “real” from the digitally “faked” is increasingly limited.

The advances in technology are compounded by the increasing availability of “nudify” or “remove clothing” apps on various platforms and app stores, which are commonly advertised online. Such apps further normalise the sexist treatment and objectification of women, with no regard for how the victims themselves may feel about it.

But it would be a mistake to blame technology alone for the harms, or the sexism, disrespect and abuse that flow from it. Technology is morally neutral. It can take neither credit nor blame.

Instead, there is a clear onus on technology developers, digital platforms and websites to be more proactive by building in safety by design. In other words, putting user safety and rights front and centre in the design and development of online services. Platforms, apps and websites also need to be made responsible for proactively preventing, disrupting and removing non-consensual content and technologies that can create such content.

Australia is leading the way with this through the Office of the eSafety Commissioner, including national laws that hold digital platforms to account.

But further global action and collaboration is needed if we truly want to address and prevent the harms of non-consensual sexualised deepfakes.