Today, Federal Minister for Industry and Science Ed Husic announced the Australian Government's interim response on the safe and responsible use of artificial intelligence (AI).

The public, particularly the Australian public, has real concerns about AI. And it is appropriate that they should.

AI is a powerful technology that is quickly making its way into our lives. By 2030, it could grow Australia's economy by 40% and increase our annual gross domestic product by A$600 billion. A recent report from the International Monetary Fund estimates that AI could also affect 40% of jobs worldwide and 60% of jobs in developed countries such as Australia.

In about half of those jobs, the impact will probably be positive, increasing productivity and reducing drudgery. But in the other half, the impact may be negative, taking away work and even eliminating some jobs altogether. Just as elevator attendants and typing-pool clerks had to move on and find new careers, truck drivers and court reporters may have to move on too.

So it is perhaps no surprise that Australia was the nation most anxious about AI in a recent survey of 31 countries by market researcher Ipsos. Some 69% of Australians, compared with just 23% of Japanese, were concerned about the use of AI. And only 20% of us believed it would improve the job market.

The Australian Government's new interim response is therefore to be welcomed. It is a somewhat late response to last year's public consultation on AI. Over 500 submissions were received from business, civil society and science. I contributed to several of those submissions.

Industry Minister Ed Husic speaks to the media at a press conference at Parliament House in Canberra on Wednesday, January 17, 2024.

AAP Image/Mick Tsikas

What are the main points of the federal government's response to AI?

Like any good plan, the federal government's response has three pillars.

First, there is a plan to work with industry to develop voluntary AI safety standards. Second, there is a plan to work with industry to develop options for voluntary labeling and watermarking of AI-generated materials. Finally, the federal government will establish an expert panel to “support the development of options for mandatory AI guardrails.”

These are all good ideas. The International Organization for Standardization has been working on AI standards for several years. Standards Australia, for instance, has just helped launch a new international standard that supports the responsible development of AI management systems.

An industry group including Microsoft, Adobe, Nikon and Leica has developed open tools for labeling and watermarking digital content. Look out for the new Content Credentials logo, which is starting to appear on digital content.

And the New South Wales government set up an 11-member expert advisory committee back in 2021 to advise it on the appropriate use of artificial intelligence.

OpenAI's ChatGPT is one of the large language model applications that have raised concerns about copyright and the mass production of AI-generated content.

Mojahid Mottakin/Unsplash

A bit late?

It is therefore hard not to conclude that the federal government's latest response is a bit weak and a bit late.

Over half of the world's democracies are due to vote this year. Over 4 billion people will go to the polls. And we will see how AI transforms these elections.

We have already seen the use of deepfakes in recent elections in Argentina and Slovakia. The Republican Party in the US has released a campaign ad that uses fully AI-generated images.

Are we prepared for a world where everything you see or hear could be fake? And will voluntary guidelines be enough to protect the integrity of those elections? Unfortunately, many tech companies are cutting staff in this area, right when they are needed most.

The European Union has been at the forefront of regulating AI; it began drafting regulations back in 2020. And we are still about a year away from the EU AI Act coming into force. This highlights how far behind Australia is.

A risk-based approach

Like the EU, the Australian government proposes a risk-based approach in its interim response. There are many harmless uses of AI that give little cause for concern. For example, you are likely to receive far fewer spam emails thanks to AI filters. And there is no need for regulation to ensure that these AI filters do their job properly.

But there are other areas, such as the judiciary and policing, where the impact of AI could be more problematic. What happens when AI discriminates in deciding who is interviewed for a job? Or when biases in facial recognition technologies result in more Indigenous people being wrongly imprisoned?

The interim response identifies such risks but takes few concrete steps to avoid them.

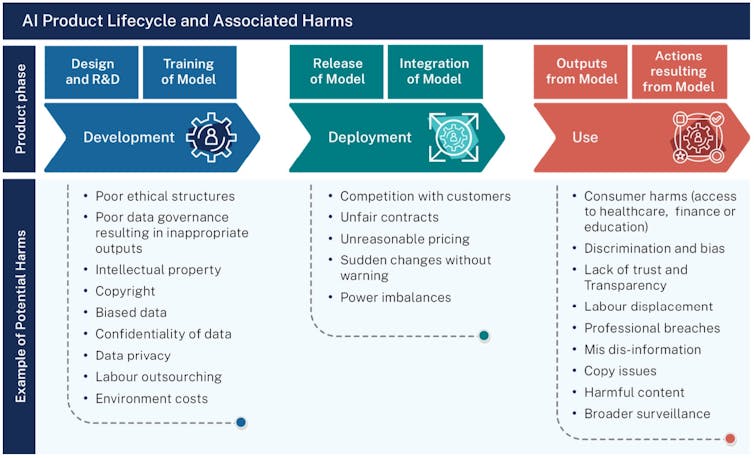

Diagram of impacts across the AI lifecycle, as summarized in the Australian Government's interim response.

Australian Government

However, the biggest risk that the report does not address is the risk of missing out. AI is a great opportunity, as great as or greater than the internet.

When the UK government released a similar report on AI risks last year, it addressed that risk by announcing a further £1 billion (A$1.9 billion) of investment, on top of more than £1 billion in previous investments.

The Australian government has so far announced less than A$200 million. Our economy and population are around a third the size of the UK's. Yet our investment so far has been twenty times smaller. We risk missing the boat.