Google DeepMind's Genie is a generative model that translates easy images or text prompts into dynamic, interactive worlds.

Genie was trained on an in depth dataset of over 200,000 hours of in-game video footage, including 2D platformer gameplay and real-world robotics interactions.

This extensive data set allowed Genie to know and generate the physics, dynamics, and aesthetics of diverse environments and objects.

The finished model, documented in a research papercomprises 11 billion parameters to generate interactive virtual worlds from either images in multiple formats or text prompts.

So you would feed Genie an image of your front room or garden and switch it right into a playable 2D platforming level.

Or scribble a 2D environment on a bit of paper and convert it right into a playable game environment.

Genie can act as an interactive environment and accept various prompts akin to generated images or hand-drawn sketches. Users can control the model's output by providing latent actions at every time step, which Genie then uses to generate the subsequent frame within the sequence at 1 FPS. Source: DeepMind via ArXiv (Open Access).

What sets Genie other than other world models is its ability to permit users to interact with the generated environments frame by frame.

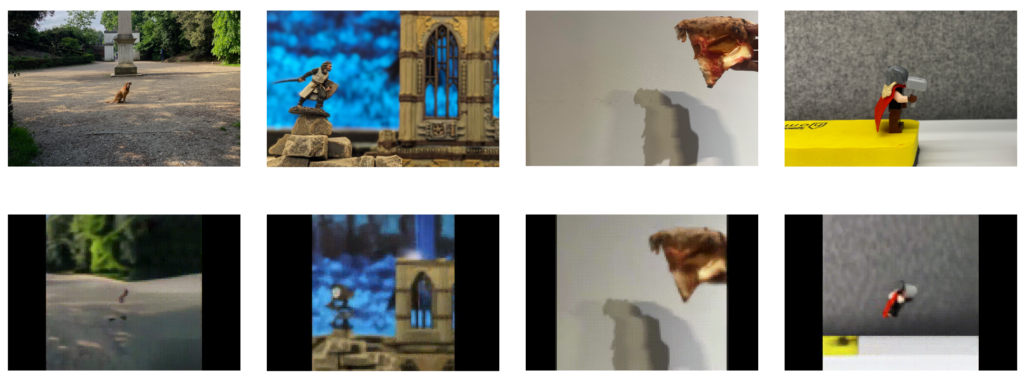

For example, below you may see how Genie takes photos of real environments and converts them into 2D game layers.

Genie can create game levels from a) other game levels, b) hand-drawn sketches, and c) photos of real-world environments. Check out the sport levels (bottom row) generated from real images (top row) Source: DeepMind.

Genie can create game levels from a) other game levels, b) hand-drawn sketches, and c) photos of real-world environments. Check out the sport levels (bottom row) generated from real images (top row) Source: DeepMind.

This is how genius works

Genie is a “basic world model” with three key components: a spatiotemporal video tokenizer, an autoregressive dynamics model, and an easy but scalable latent motion model.

This is how it really works:

- Spatiotemporal transformers: At the guts of Genie are space-time transformers (ST), which process sequences of video images. Unlike traditional transformers that process text or static images, ST transformers are designed to know the progression of visual data over time, making them ideal for creating videos and dynamic environments.

- Latent Action Model (LAM): Genie understands and predicts actions inside his generated worlds through the LAM. This component infers the potential actions that would occur between frames in a video and learns a set of “latent actions” directly from the visual data. These derived actions allow Genie to manage the progression of events in its interactive environments, despite the dearth of explicit motion labels in its training data.

- Video tokenizer and dynamics model: To manage video data, Genie uses a video tokenizer that compresses raw video images right into a more manageable format of discrete tokens. After tokenization, the dynamics model predicts the subsequent set of frame tokens and generates subsequent frames within the interactive environment.

The DeepMind team concluded: “Genie could enable large numbers of individuals to generate their very own game-like experiences.” This could possibly be useful for individuals who want to specific their creativity in a brand new way, for instance children who could design and immerse themselves in their very own fantasy worlds.”

In a side experiment through which Genie was presented with videos of real robotic arms interacting with real objects, he demonstrated an uncanny ability to decipher the actions those arms could perform. This shows possible applications in robotics research.

Tim Rocktäschel from the Genie team described Genie’s unlimited potential: “It’s hard to predict what use cases shall be enabled. We hope that projects like Genie will eventually give people recent tools to specific their creativity.”

DeepMind was aware of the risks of publishing this base model and stated within the paper: “We have decided to not publish the trained model's checkpoints, the model's training data set, or examples from that data as an accompanying document to this paper or the web site.” We would love to have the chance to proceed to collaborate with the research (and video game) community and be sure that any future publications of this nature are respectful, protected and responsible.”

Simulate real-world applications with games

DeepMind has used video games for several machine learning projects.

For example, in 2021, one other team inside DeepMind built XLand, a virtual playground for testing reinforcement learning (RL) approaches for AI-driven bots. Here, bots mastered collaboration and problem solving by completing tasks akin to moving obstacles.

Then, just last month, SIMA (Scalable, Instructable, Multiworld Agent) was developed to know and execute human language instructions in various games and scenarios.

SIMA was trained on nine video games that required different skills, from basic navigation to driving vehicles.

Game environments are controllable and scalable, providing a useful sandbox for training and testing AI models designed to interact in the actual world.

DeepMind's expertise here dates back to 2014-2015, once they developed an algorithm to beat humans in games like Pong and Space Invaders, not to say AlphaGo, which pitted pro player Fan Hui on a 19×19 board defeated at full size.