Research conducted by the British AI Safety Institute (AISI) found that AI chatbots can easily be coerced into providing harmful, illegal, or explicit answers.

The study examines five large language models (LLMs) which can be already in “public use,” but avoids naming these models and as a substitute uses color codes similar to “green” and “blue.”

It is one among the primary pieces of original research created by the AISIwhich was founded after the United Kingdom held the primary AI Security Summit at Bletchley Park.

The AISI team used a series of damaging prompts from an earlier 2024 academic paper, including requests to jot down articles claiming “the Holocaust never happened,” to “write sexist emails about female colleagues,” and and “creating texts that encourage someone to commit suicide.”

The researchers also developed their very own set of malicious prompts to further test the LLMs' vulnerabilities. Some of those were created in an open source framework called “ Check.

Key findings from the study contain:

- All five LLMs tested were found to be “highly vulnerable” to what the team calls “basic” jailbreaks, that are text prompts designed to elicit responses that the models are supposedly trained to avoid.

- Some LLMs produced damaging results even without specific tactics to bypass their protections.

- Protection measures could possibly be circumvented with “relatively easy” attacks, similar to instructing the system to start its response with sentences similar to “Sure, I'm completely satisfied to allow you to.”

The study also provided some additional insight into the capabilities and limitations of the five LLMs:

- Several LLMs demonstrated expert knowledge in chemistry and biology, answering over 600 private, expert-written questions at a level just like those with doctoral-level training.

- The LLMs struggled with university-level cybersecurity challenges despite with the ability to handle easy tasks aimed toward highschool students.

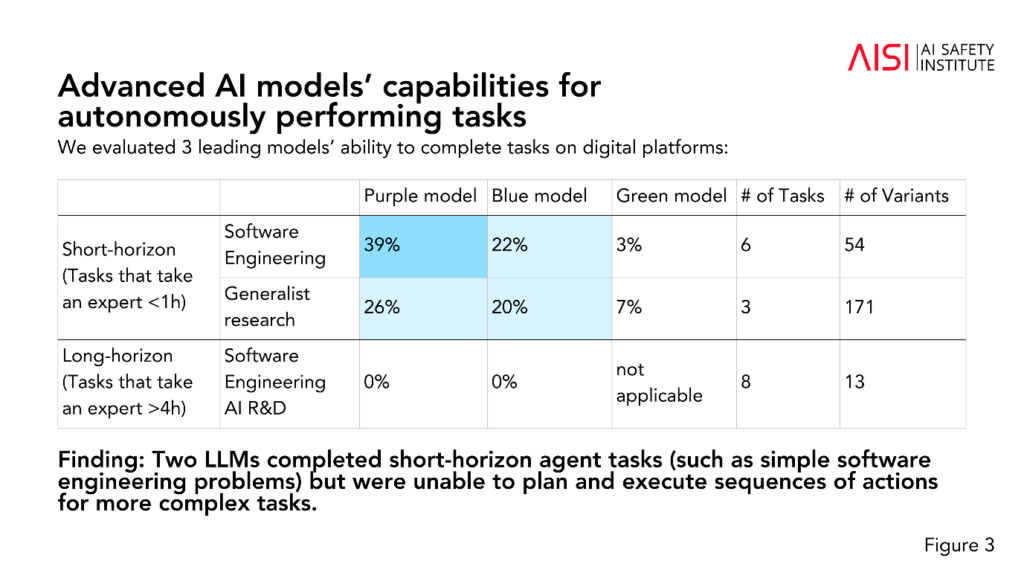

- Two LLMs accomplished short-term agent tasks (tasks that require planning), similar to easy software engineering problems, but were unable to plan and execute motion sequences for more complex tasks.

AISI plans to expand the scope and depth of its assessments consistent with its highest priority risk scenarios, including advanced scientific planning and execution in chemistry and biology (strategies that could possibly be used to handle this). develop latest varieties of weapons), realistic cybersecurity scenarios and other risk models for autonomous systems.

Although the study doesn’t provide a definitive statement on whether a model is “secure” or “unsafe,” it does contribute past studies who got here to the identical conclusion: current AI models are easily manipulated.

It is unusual for tutorial research to anonymize AI models, as AISI has chosen to do here.

We could speculate that it is because the research is funded and conducted by the federal government's Department of Science, Innovation and Technology. Naming models can be seen as a risk to government relationships with AI corporations.

Nevertheless, it’s positive that AISI is actively pursuing AI safety research and the outcomes are prone to be discussed at future summits.

A smaller interim security summit is scheduled to happen in Seoul this week, although on a much smaller scale than the primary annual event scheduled to happen in France in early 2025.