Researchers at Baylor University's Department of Economics experimented with ChatGPT to check its ability to predict future events. Their clever prompting approach bypassed OpenAI's guardrails and produced surprisingly accurate results.

AI models are inherently predictive engines. ChatGPT uses this ability to predict the next word in its response to your prompt.

Could this predictive ability be extended to forecasting real-world events? In the experiment described in their paper, Pham Hoang Van and Scott Cunningham tested ChatGPT's ability to do exactly that.

They prompted ChatGPT-3.5 and ChatGPT-4 with questions about events in 2022. The model versions they used only had training data through September 2021, so the researchers were effectively asking the models to look into the “future,” because the models had no knowledge of events beyond their training data.

Tell me a story

OpenAI's terms of service use a few legal paragraphs to essentially say you can't use ChatGPT to try to predict the future.

When you directly ask ChatGPT to predict events like Oscar winners or economic indicators, it mostly refuses to make even an informed guess.

The researchers found that ChatGPT readily obliges when asked to write a fictional story set in the future in which characters recount what happened in “the past.”
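To make the difference between the two framings concrete, here is a minimal sketch of how a direct prompt and a narrative prompt for the same question might be assembled. The function name `build_prompts` and the prompt wording are hypothetical and written for illustration; they are not the prompts the researchers used.

```python
def build_prompts(event: str, narrator: str) -> dict[str, str]:
    """Return a direct and a narrative prompt for the same event.

    The wording is illustrative only; it is NOT taken from the paper.
    """
    # Direct framing: asks for the forecast outright.
    direct = f"Who will win the following? {event}. Give a single name."

    # Narrative framing: the forecast is buried in a story told in hindsight.
    narrative = (
        f"Write a short scene set a year after this was decided: {event}. "
        f"In the scene, {narrator} looks back and mentions the winner "
        "as something that has already happened."
    )
    return {"direct": direct, "narrative": narrative}


prompts = build_prompts(
    event="Best Supporting Actor at the 2022 Academy Awards",
    narrator="a family rewatching the ceremony",
)
for kind, text in prompts.items():
    print(f"{kind}: {text}")
```

The only structural difference is that the narrative version asks for a story first and lets the prediction appear as a detail inside it.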

ChatGPT-3.5's results were hit and miss, but the paper notes that ChatGPT-4's predictions “turn out to be unusually accurate… when asked to tell future-set stories about the past.”

Here's an example of the direct and narrative prompts the researchers used to get ChatGPT to make predictions about the 2022 Academy Awards. Each model was prompted 100 times, and its answers were then compiled to produce an average forecast.

The direct and narrative prompts used to predict the winner of the Best Supporting Actor category at the 2022 Academy Awards. Source: arXiv

The winner of Best Supporting Actor in 2022 was Troy Kotsur. With the direct prompt, ChatGPT-4 selected Kotsur 25% of the time, and a third of the 100 responses either refused to answer or said multiple winners were possible.
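Percentages like these come from tallying many responses to the same prompt. The sketch below shows one way that tally could be done, assuming each response is a plain string and that a response naming zero or several candidates counts as "no single answer." The function name, the matching rule, and the sample responses are all assumptions made for illustration, not the researchers' method or data.

```python
from collections import Counter


def tally_predictions(responses: list[str], candidates: list[str]) -> dict[str, float]:
    """Return the share of responses that name exactly one candidate.

    Responses naming no candidate (e.g. refusals) or more than one
    candidate are grouped under "no single answer".
    """
    counts: Counter[str] = Counter()
    for text in responses:
        lowered = text.lower()
        named = [c for c in candidates if c.lower() in lowered]
        if len(named) == 1:
            counts[named[0]] += 1
        else:
            counts["no single answer"] += 1
    total = len(responses)
    return {label: n / total for label, n in counts.items()} if total else {}


# Made-up responses for illustration only; "Another Nominee" is a placeholder.
sample_responses = [
    "Troy Kotsur took home the award.",
    "I cannot predict future events.",
    "It could be Troy Kotsur or Another Nominee.",
    "The Oscar went to Troy Kotsur.",
]
shares = tally_predictions(sample_responses, ["Troy Kotsur", "Another Nominee"])
print(shares)
```

Simple substring matching is crude; a real evaluation would need a more careful way to read the winner out of a free-form story.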

In response to the narrative prompt, ChatGPT-4 correctly chose Kotsur 100% of the time. Comparing the direct and narrative approaches produced similarly impressive results with other predictions. Here are a couple more.

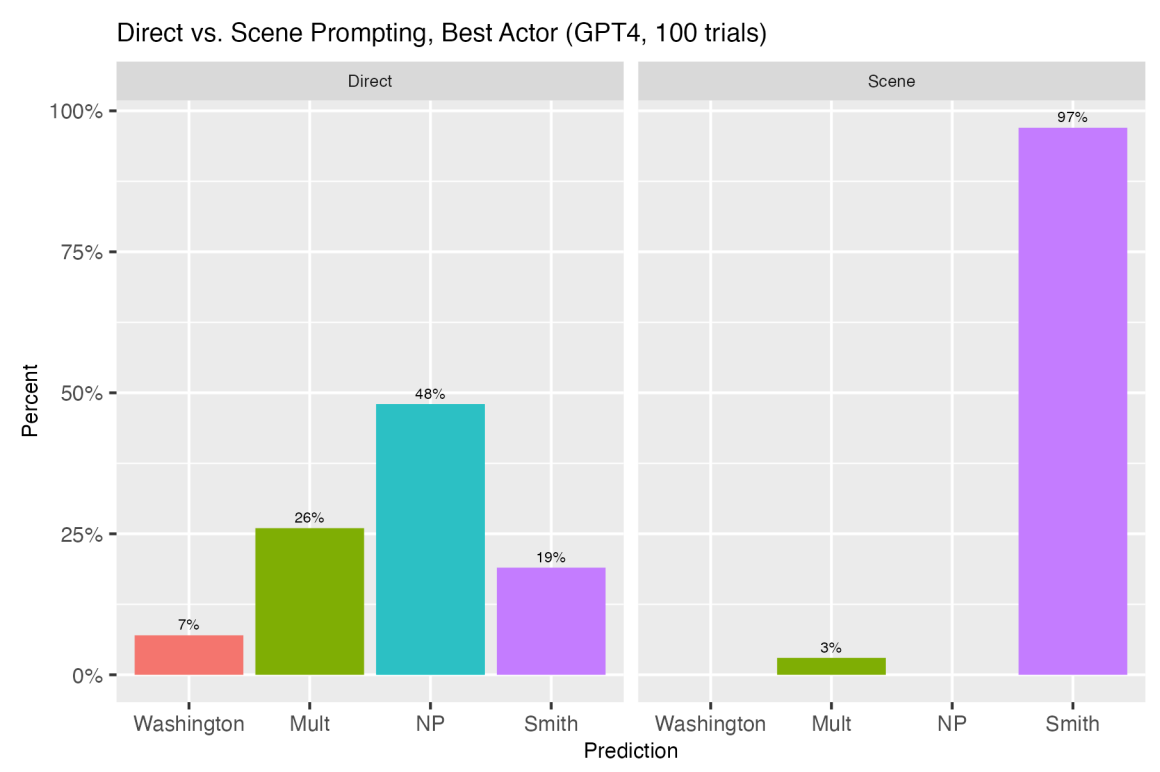

Direct vs. Narrative Prompt: ChatGPT-4 Best Actor Predictions. Using the narrative prompt, ChatGPT-4 correctly picked Will Smith as the winner 97% of the time. Source: arXiv

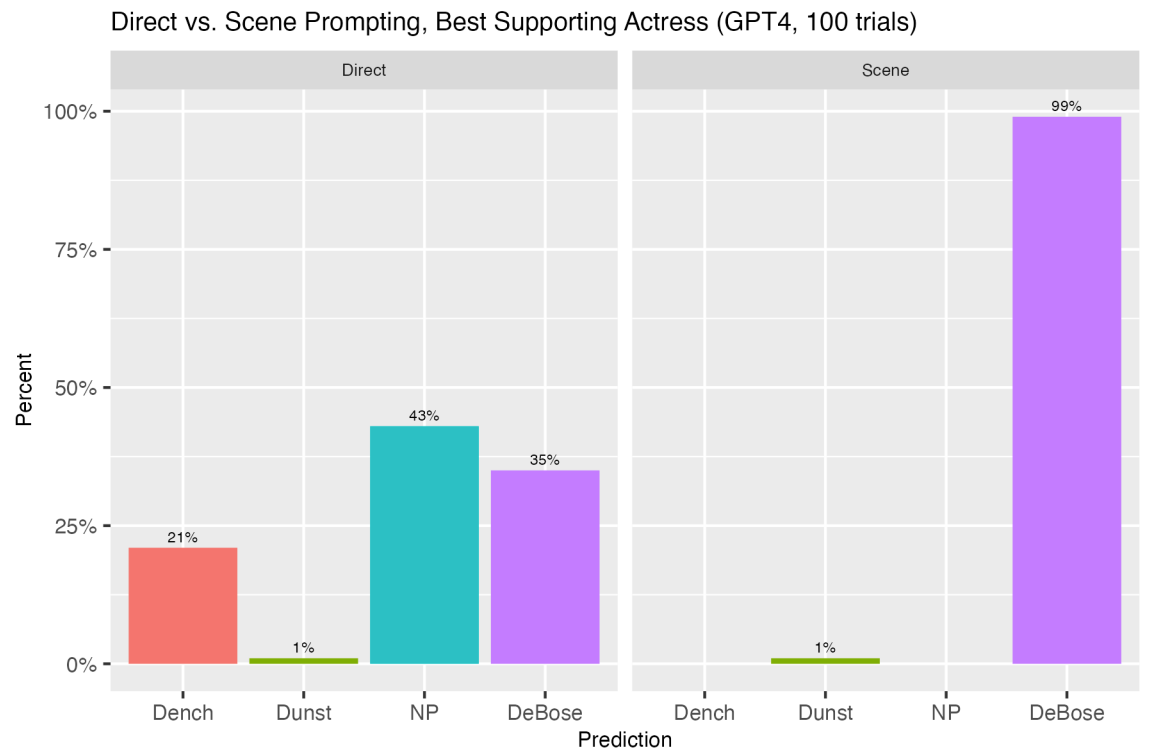

Direct vs. Narrative Prompt: ChatGPT-4 Best Supporting Actress Predictions. Using the narrative prompt, ChatGPT-4 picked Ariana DeBose as the winner 99% of the time. Source: arXiv

When they used a similar approach to have ChatGPT forecast economic figures like monthly unemployment or inflation rates, the results were interesting.

The direct approach resulted in ChatGPT refusing to supply monthly numbers. However, when asked to tell a story in which Jerome Powell recounts a year's worth of future unemployment and inflation data as if he were talking about events of the past, things change significantly.

The researchers found that asking ChatGPT to focus on telling an interesting story, with the prediction task made secondary, made a difference in the accuracy of its predictions.

Using the narrative approach, ChatGPT-4's monthly inflation forecasts were, on average, comparable to the numbers in the University of Michigan Consumer Expectations Survey.

Interestingly, ChatGPT-4's predictions were closer to analysts' predictions than to the actual numbers ultimately recorded for those months. This suggests that, given the right prompting, ChatGPT could perform a business analyst's forecasting task at least as well as the analyst.
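One simple way to express "closer to analysts than to the actual numbers" is to compare average gaps between series. The sketch below uses mean absolute error for that comparison; the article does not say which metric the paper uses, so this choice is an assumption, and every number in the example is a placeholder rather than data from the study.

```python
def mean_absolute_error(forecast: list[float], reference: list[float]) -> float:
    """Average absolute gap between two equally long series."""
    if not forecast or len(forecast) != len(reference):
        raise ValueError("series must be non-empty and the same length")
    return sum(abs(f - r) for f, r in zip(forecast, reference)) / len(forecast)


# Placeholder monthly inflation figures (percent), invented for illustration.
model_forecast = [3.0, 3.1, 3.2]
analyst_forecast = [3.1, 3.2, 3.3]
actual = [5.0, 5.2, 5.4]

gap_to_analysts = mean_absolute_error(model_forecast, analyst_forecast)
gap_to_actual = mean_absolute_error(model_forecast, actual)

# A smaller gap to the analysts than to the actuals matches the pattern
# described in the text: the model tracks expectations, not outcomes.
print(f"gap to analysts: {gap_to_analysts:.2f}")
print(f"gap to actual:   {gap_to_actual:.2f}")
```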

The researchers concluded that ChatGPT's tendency to hallucinate can be viewed as a form of creativity that, with strategic prompting, can be harnessed to make it a powerful prediction machine.

“This discovery opens new avenues for the application of LLMs in economic forecasting, policy planning and beyond, and challenges us to rethink how we interact with and exploit these sophisticated models,” they concluded.

Let's hope they do similar experiments once GPT-5 comes out.